Accelerating the Use of Generative AI in Healthcare with Precision Regulation

The healthcare industry is on the cusp of a technological revolution, driven by rapid advancements in artificial intelligence (AI). The urgency of using AI to innovate care delivery is real: clinician burnout is at record levels, with cognitive burden and low-value tasks among the contributing factors. Among these advancements, generative AI has emerged as a powerful tool with the potential to transform how healthcare providers deliver care, make decisions, and interact with patients. However, as with any transformative technology, the promise of generative AI comes with significant challenges, particularly in the realms of regulation, ethics, and patient safety.

To fully harness the benefits of generative AI in healthcare, there must be a delicate balance between fostering innovation and implementing precise, effective regulation. This balance is essential to ensure that the technology can be adopted safely and responsibly, while still enabling the creativity and progress that are crucial to its success.

The Promise of Generative AI in Healthcare

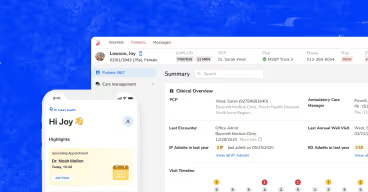

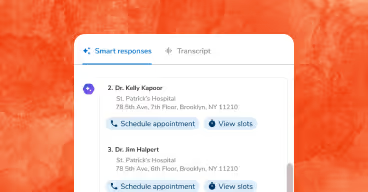

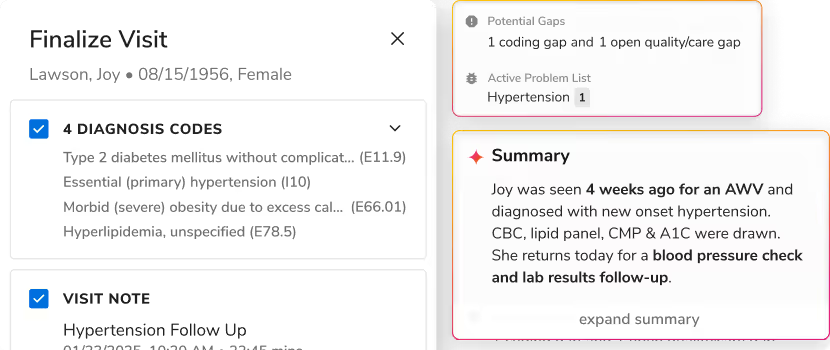

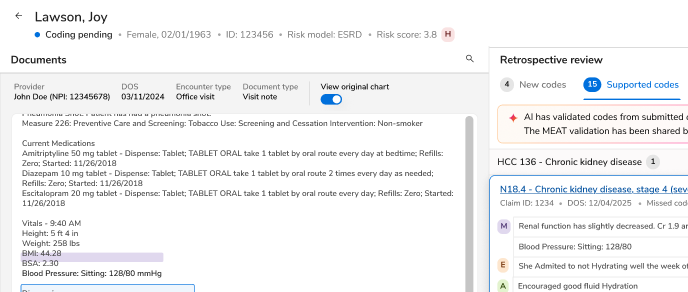

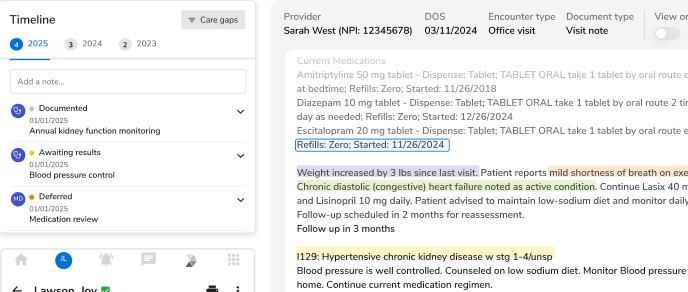

Generative AI, which refers to AI systems capable of creating new content—such as text, images, or even clinical recommendations—offers a myriad of opportunities in healthcare. For example, AI-driven tools can assist in clinical documentation, reducing the administrative burden on providers and allowing them to focus more on patient care. Generative AI can also support diagnostic decision-making by analyzing vast amounts of medical data to provide insights that might be overlooked by human practitioners.

Moreover, generative AI has the potential to personalize patient care in unprecedented ways. By analyzing patient history, genetics, and other data, AI can help tailor treatment plans that are more effective and less prone to trial-and-error approaches. This level of personalization could lead to better outcomes, reduced costs, and a more patient-centered healthcare system.

The Regulatory Challenge

While the potential of generative AI in healthcare is immense, it also presents unique regulatory challenges. Healthcare is a highly regulated industry, with patient safety, privacy, and ethical considerations at the forefront of any new technology adoption. The introduction of generative AI adds layers of complexity, particularly in ensuring that the technology is both accurate and trustworthy.

One of the key challenges in regulating generative AI is the "black box" nature of many AI models. These systems can generate outputs based on vast and complex datasets, but the rationale behind their decisions is often opaque. This lack of transparency raises concerns about accountability, especially in cases where AI-generated recommendations could directly impact patient outcomes.

Another challenge is ensuring that generative AI complies with existing healthcare regulations, such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States, which governs the privacy and security of patient data. AI systems must be designed to handle sensitive data in a way that meets regulatory standards, while also providing the flexibility needed for innovation.

Precision Regulation: A Path Forward

To navigate these challenges, a framework of precision regulation is needed—one that is both rigorous and adaptable. Precision regulation involves creating rules and guidelines that are specific enough to ensure safety and compliance, yet flexible enough to allow for innovation and growth.

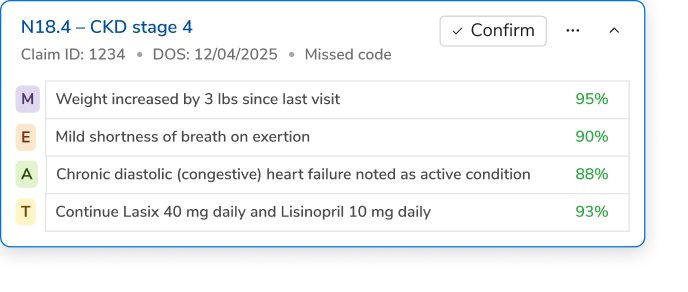

One approach is to develop clear guidelines for the development and deployment of generative AI in healthcare. These guidelines could include standards for transparency, requiring AI systems to provide explanations for their recommendations, and traceability, ensuring that AI-generated outputs can be linked back to the data and algorithms that produced them. This would help build trust among healthcare providers and patients alike, as well as provide a basis for accountability.
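
To make the traceability idea concrete, here is a minimal Python sketch of what a provenance record for a single AI-generated output might look like. Every name in it (`ProvenanceRecord`, `make_record`, `matches`, and the example model name and version) is a hypothetical chosen for illustration; it does not reflect any regulatory standard or specific product. The sketch stores hashes instead of the text itself so that the audit entry does not copy clinical content, though whether hashing alone satisfies a given privacy requirement is outside its scope.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of a string as hexadecimal text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical audit entry linking one output to the model and input behind it."""
    model_name: str
    model_version: str
    input_hash: str
    output_hash: str
    created_at: str


def make_record(model_name: str, model_version: str,
                prompt: str, output: str) -> ProvenanceRecord:
    """Build a provenance record for a single generated output."""
    return ProvenanceRecord(
        model_name=model_name,
        model_version=model_version,
        input_hash=sha256_hex(prompt),
        output_hash=sha256_hex(output),
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def matches(record: ProvenanceRecord, prompt: str, output: str) -> bool:
    """Check whether a prompt/output pair is the one the record describes."""
    return (record.input_hash == sha256_hex(prompt)
            and record.output_hash == sha256_hex(output))


# Example with made-up values: record an output, then verify it later.
record = make_record("demo-model", "1.0",
                     "Summarize the visit note.", "Draft summary text.")
verified = matches(record, "Summarize the visit note.", "Draft summary text.")
tampered = matches(record, "Summarize the visit note.", "Edited summary text.")
```

In this sketch `verified` is true and `tampered` is false, which is the property an auditor would rely on: a stored record can confirm that a given output came from a specific model version and input.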

Regulators should also work closely with industry stakeholders, including healthcare providers, AI developers, and patient advocacy groups, to ensure that regulations are informed by practical insights and real-world needs. This collaborative approach can help identify potential risks early and create a regulatory environment that supports innovation while safeguarding patient interests.

Additionally, there should be a focus on continuous monitoring and post-market surveillance of AI systems. Because AI systems are dynamic (they may keep “learning” as they are retrained), ongoing assessment is crucial to detect and address issues that arise as the technology evolves and is used across both traditional clinical settings and non-traditional ones such as retail clinics, hospital-at-home programs, and virtual care. This could involve using AI itself to monitor and evaluate its own performance, creating a transparent and reliable feedback loop that enhances both safety and effectiveness.
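
As one illustration of what continuous monitoring could mean in practice, the Python sketch below tracks how often clinicians accept an AI-generated suggestion over a rolling window and flags the system for human review when that rate falls below a threshold. The metric, the class name `RollingMonitor`, the window size, and the threshold are all assumptions made for this example, not values drawn from any regulation or deployed system.

```python
from collections import deque
from typing import Optional


class RollingMonitor:
    """Hypothetical monitor for the acceptance rate of AI suggestions."""

    def __init__(self, window_size: int, min_rate: float) -> None:
        # Keep only the most recent `window_size` outcomes.
        self.outcomes = deque(maxlen=window_size)
        self.min_rate = min_rate

    def record(self, accepted: bool) -> None:
        """Log whether a clinician accepted one AI suggestion."""
        self.outcomes.append(accepted)

    def rate(self) -> Optional[float]:
        """Return the acceptance rate in the window, or None if it is empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """Flag for review once the window is full and the rate is too low."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.rate() < self.min_rate


# Example with made-up numbers: a window of 4 and a 75% minimum rate.
monitor = RollingMonitor(window_size=4, min_rate=0.75)
for accepted in [True, True, True, True]:
    monitor.record(accepted)
flag_before = monitor.needs_review()

for accepted in [False, False]:
    monitor.record(accepted)
flag_after = monitor.needs_review()
```

Here `flag_before` is false and `flag_after` is true, because the two rejections push the windowed rate down to 50%. A real surveillance program would likely track several signals across care settings; a single rate is used only to keep the sketch short.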

Balancing Opportunities and Challenges

The integration of generative AI into healthcare is a balancing act between seizing opportunities and addressing challenges. On one hand, AI has the potential to revolutionize patient care, improve outcomes, and reduce costs. On the other hand, the complexities of regulation, ethics, and patient safety cannot and should not be ignored.

Achieving the right balance requires a commitment to precision regulation—one that is informed by collaboration, guided by transparency, and driven by the imperative to protect patient welfare. By carefully navigating these challenges, the healthcare industry can unlock the full potential of generative AI, fostering an era of innovation that benefits providers, patients, and society as a whole.

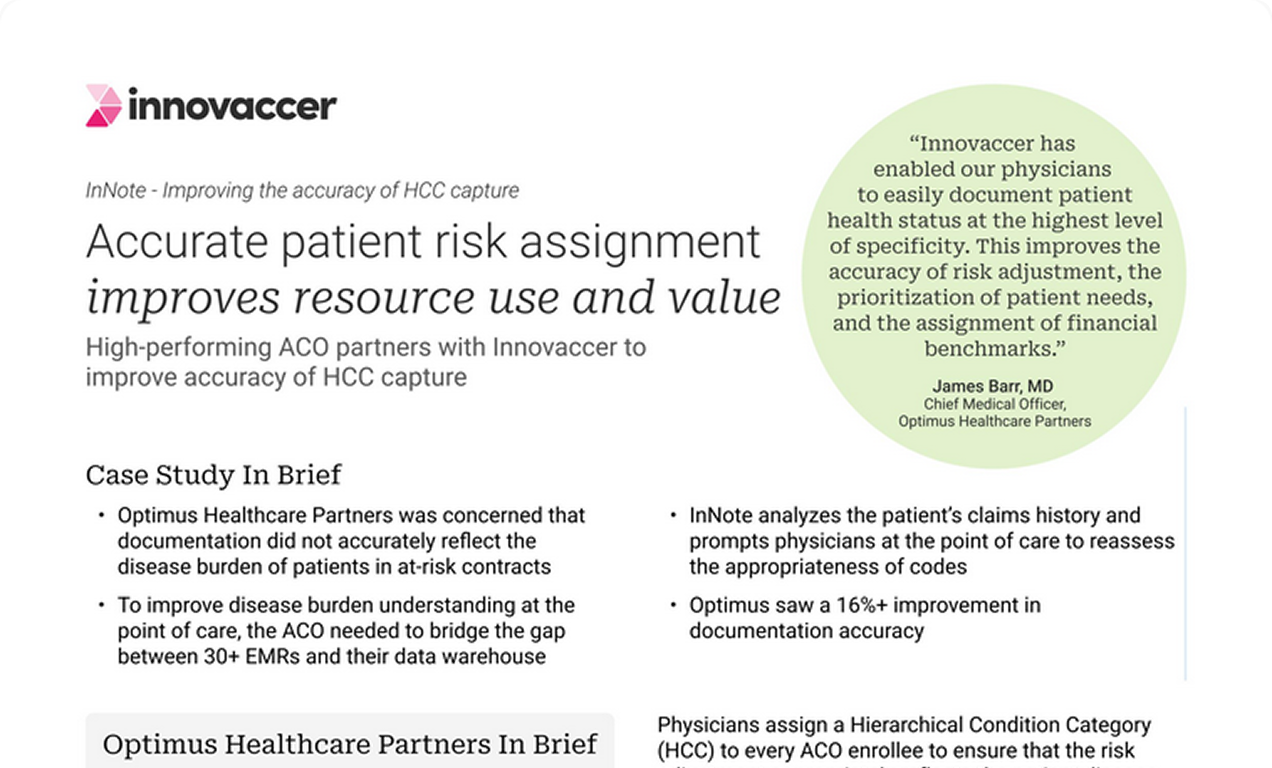

In the end, the goal is not just to regulate AI but to create an environment where innovation and regulation work hand in hand, ensuring that generative AI can be a force for advancing health and wellbeing. It is with this mindset that we are investing in purposeful generative AI to solve the challenges that physicians face when managing care.
