Why Trust is Public Health’s Most Critical AI Infrastructure

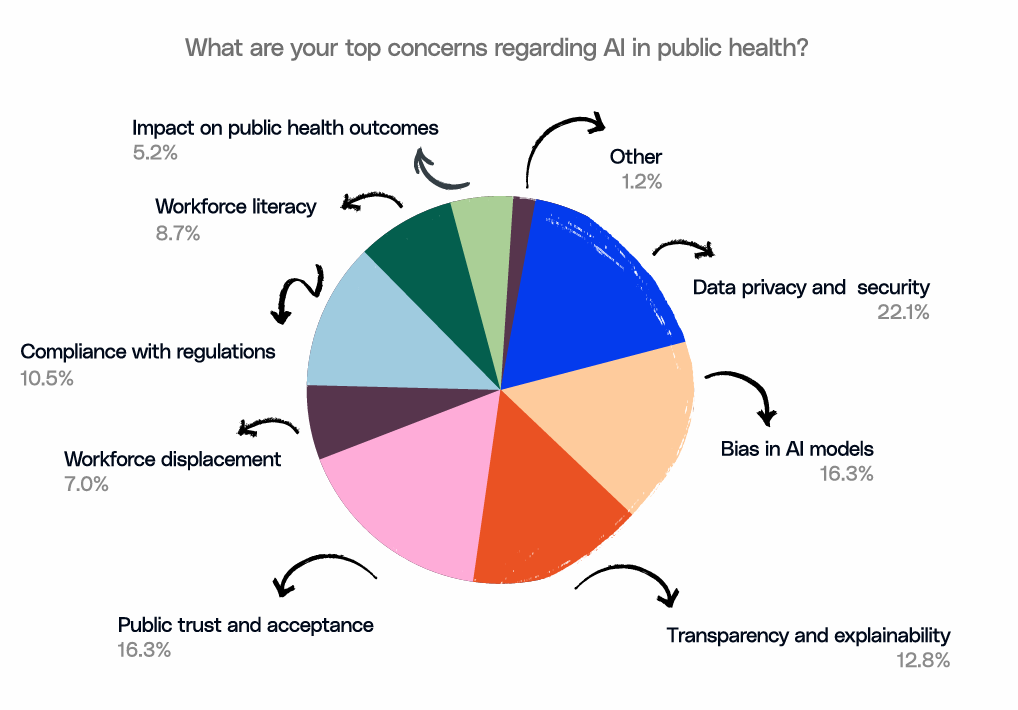

Our last blog post underlined a pressing truth: AI in public health is advancing faster than the governance structures meant to safeguard it. This gap poses a real threat to community confidence and long-term sustainability. But beneath this governance gap lies an even more foundational issue: trust. According to a recent survey, the most urgent concerns among public health leaders are data privacy, bias, transparency, and public trust.

These aren’t technical hurdles but challenges of legitimacy and accountability. In this piece, we explore why trust, not technology, has emerged as the true infrastructure for public health AI, and what it will take for agencies to build systems that communities can rely on.

The Trust Gap: Communities Won’t Engage with Systems They Don’t Understand

Public health has always relied on community trust. Vaccination campaigns, disaster alerts, and health advisories all fail without public acceptance. AI raises the stakes.

In the recent survey-based report “How State and Local Public Health Leaders Can Promote AI and Data Governance,” “public trust and acceptance” ranked as a top concern for 16.3% of respondents, placing it on par with concerns about bias in AI models (16.3%) and just behind data privacy and security (22.1%). This signals that agencies believe communities may reject AI-enabled systems they perceive as opaque, extractive, or inequitable.

The Equity Imperative: AI Can Narrow Public Health Gaps, or Widen Them

According to recent research, AI models trained on public clinical datasets often show substantial demographic imbalances, with Hispanic and Black patients making up only about 2.8% and 7.3% of samples, respectively. Such underrepresentation has real consequences: models trained on skewed data tend to deliver lower diagnostic accuracy and weaker performance for these communities, deepening the very gaps public health aims to close.

These risks echo what leaders voiced in the survey. Respondents cited bias in AI models as one of their top concerns. This highlights a broader fear that algorithms could reinforce existing disparities in disease burden, access, or resource allocation. And with over 65% of departments reporting they are not fully ready to integrate diverse datasets, there is a real possibility that AI systems will be built on incomplete or unevenly representative information.

The downstream impacts are easy to imagine:

- Early-warning systems that fail to detect risks in marginalized neighborhoods

- Chatbots that misinterpret culturally specific health questions

- Resource-allocation tools that disproportionately benefit already well-resourced areas

Equity, then, cannot be an afterthought. It must be deliberately engineered into AI from the start, for example by training on representative datasets and having clinicians review the data. Only by building these safeguards upfront can AI become a tool that closes public health gaps rather than widening them.
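For teams that want a concrete starting point, a representation check can be as simple as comparing each group's share of a dataset with its share of the population served. The sketch below is illustrative only: the function name `representation_gaps`, the group labels, the counts, the reference shares, and the 0.5 tolerance are all hypothetical assumptions, not figures from the survey or the research cited above.

```python
def representation_gaps(sample_counts, reference_shares, tolerance=0.5):
    """Return groups whose dataset share is below tolerance * reference share.

    sample_counts:    {group: number of records in the dataset}
    reference_shares: {group: share of the served population, 0..1}
    tolerance:        hypothetical cutoff; 0.5 means "less than half
                      of the expected share"
    """
    total = sum(sample_counts.values())
    if total == 0:
        raise ValueError("dataset is empty")

    flagged = {}
    for group, ref_share in reference_shares.items():
        share = sample_counts.get(group, 0) / total
        if share < tolerance * ref_share:
            flagged[group] = round(share, 3)
    return flagged


# Hypothetical counts and population shares, for illustration only.
counts = {"group_a": 820, "group_b": 110, "group_c": 70}
reference = {"group_a": 0.60, "group_b": 0.25, "group_c": 0.15}

flagged = representation_gaps(counts, reference)
print(flagged)
```

A check like this only surfaces gaps in the data. Deciding what counts as an acceptable share, and what to do about a shortfall, remains a judgment call for the agency and the communities involved.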

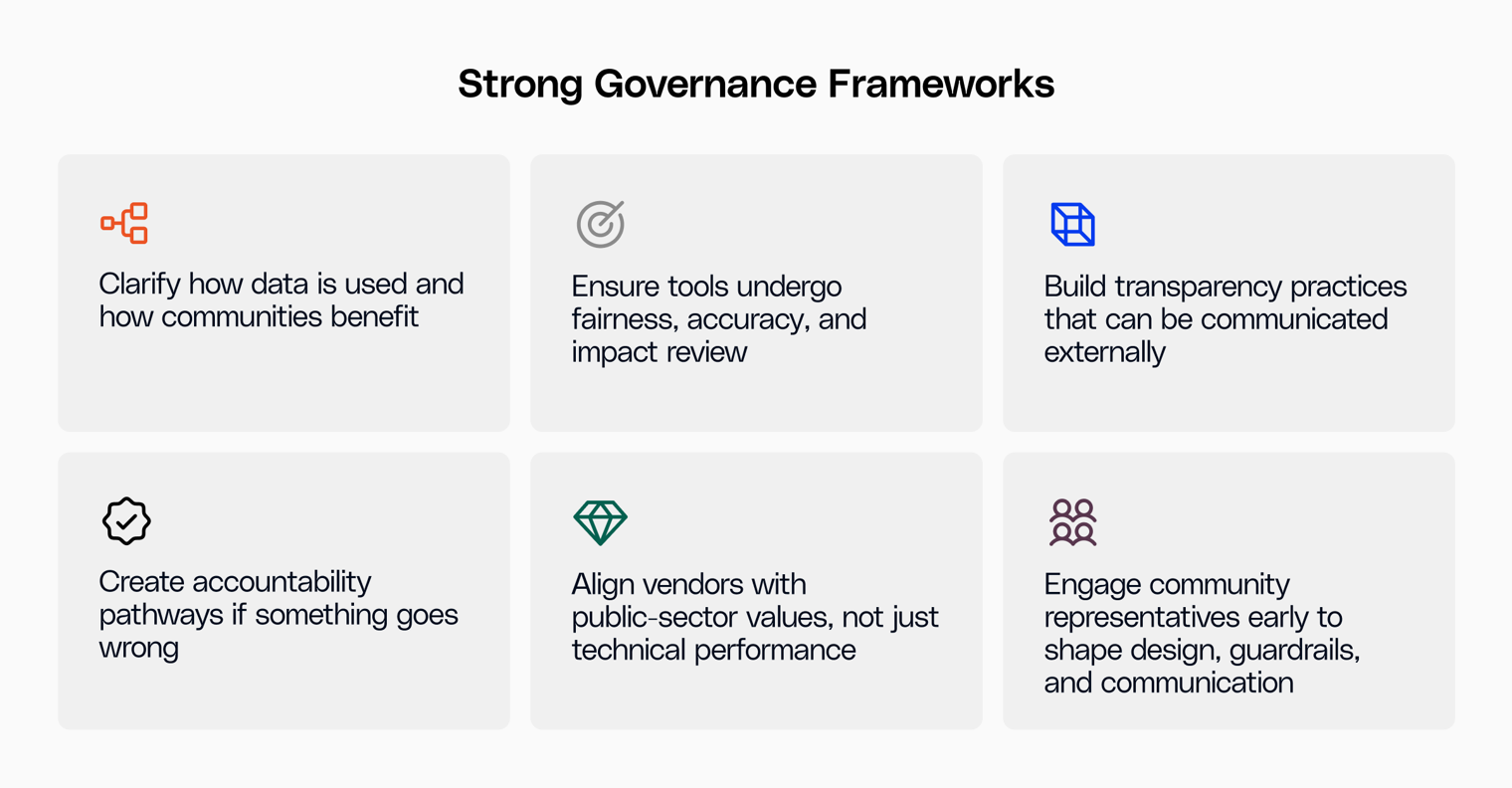

Why Governance Is the Missing Link

AI adoption is happening, with or without structure. The survey makes this clear: more than half of departments launched AI pilots in the past year, yet 60% said that they don’t have fully developed policies in place.

This imbalance creates fragmented norms:

- Different teams apply different standards for risk assessment

- Vendor transparency varies widely

- Ethical guardrails are inconsistent

- No shared mechanism ensures community oversight

Most organizations perceive governance as just another bureaucratic measure. It isn’t. Governance is the mechanism by which trust and equity become real.

The Path Forward: Trustworthy AI Starts with People, Not Models

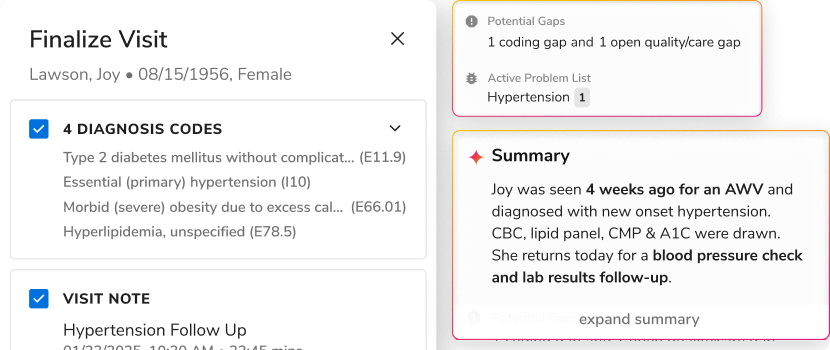

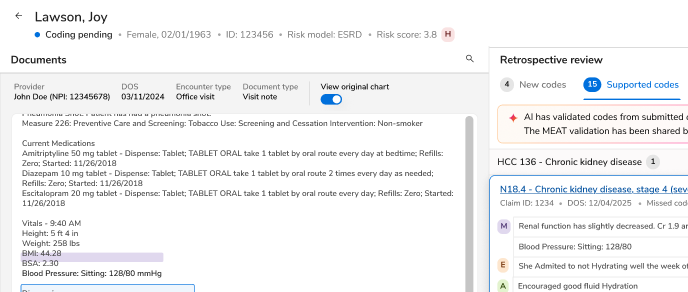

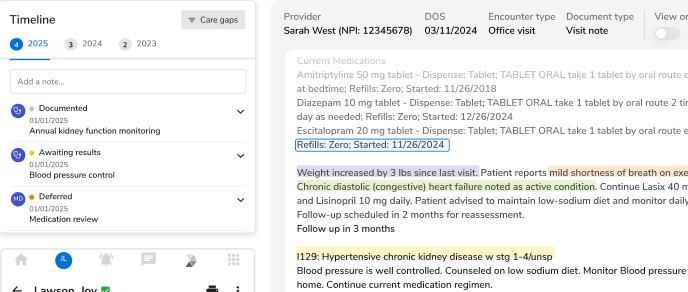

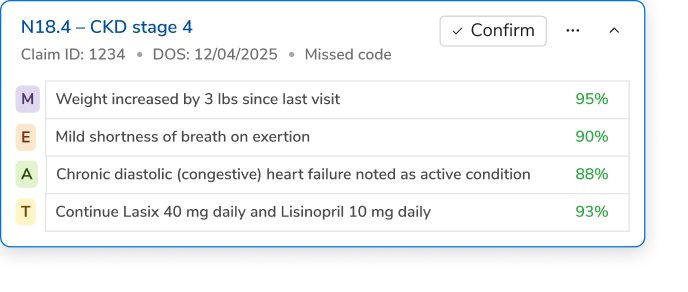

The survey’s stories of emerging use cases, such as integrating fragmented systems or summarizing case notes, show that AI’s promise is real. But fulfilling that promise requires shifting the mindset from “Can we use AI?” to “Can the public trust the AI we use?”

Here are four principles public health agencies can adopt immediately:

1. Make transparency a default, not an exception

Publish what data is used, how models are evaluated, and what safeguards exist.

2. Embed community voice into AI workflows

Invite community health workers, local leaders, and advocacy organizations into model review and pilot design.

3. Operationalize equity in every step of the AI lifecycle

Require diverse training datasets, test for disparate impacts, and ensure that equity reviews carry the same weight as performance metrics.

4. Build workforce confidence through literacy, not dependency

Training must accompany every deployment. When staff understand the tools, they can better safeguard fairness.
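As one possible way to act on the third principle, testing for disparate impact can begin with measuring how well a model performs for each group separately. The sketch below computes recall (the share of true cases the model catches) per group and compares each group with the best-served one. The function names, group labels, and records are hypothetical, and recall is only one of several fairness measures an agency might choose.

```python
from collections import defaultdict


def recall_by_group(records):
    """records: iterable of (group, y_true, y_pred) with 0/1 labels."""
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    # Only groups with at least one true case appear in the result.
    return {g: true_positives[g] / positives[g] for g in positives}


def disparity_ratios(recalls):
    """Each group's recall divided by the highest group recall."""
    best = max(recalls.values())
    return {g: (r / best if best > 0 else 0.0) for g, r in recalls.items()}


# Hypothetical evaluation records: (group, actual outcome, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_a", 0, 0), ("group_b", 0, 1),
]

recalls = recall_by_group(records)
ratios = disparity_ratios(recalls)
print(recalls)
print(ratios)
```

A ratio well below 1.0 for a group suggests the model misses more true cases there. What threshold triggers a review is a policy decision, which is where equity reviews and community input come in.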

A Moment of Choice

AI in public health is no longer speculative. Counties and states are using it today to predict outbreaks and support frontline staff. The question now is not whether agencies will adopt AI, but whether they will adopt it in a way that strengthens the bond between public institutions and the communities they serve.

If public health leaders treat trust and equity, not just speed or efficiency, as the core metrics of AI success, then AI can truly widen the circle of who benefits from data-driven health systems instead of becoming yet another lever that deepens existing divides.

This is the moment to choose. And the agencies that ground their AI strategies in transparency will build a more trusted, equitable, and resilient public health future.

Learn how your agency can build a robust governance foundation. Read the report now.
