Sara: The Next Frontier in AI for Healthcare

Artificial Intelligence (AI) has advanced over the past four decades, evolving from simple predictive models to sophisticated generative AI. In the past year, generative AI has brought the marvels of AI technology to millions, perhaps billions, by introducing natural language in both its input and output.

Generative AI thrives on probability algorithms, predicting the next sequence of words based on the colossal body of text it's trained on. The result is nothing short of magical. However, its intelligence is only as robust as:

- The quality and scope of its training data.

- The precision of the questions asked.

- The guidelines provided for output generation.

- Its ability to discern what to prioritize and what to overlook.

The Healthcare Challenge: Navigating the Maze of Generative AI

The application of all-purpose generative AI in healthcare presents unique challenges that must be addressed for the industry to benefit fully from this new tool. Those challenges include:

The potential for AI hallucinations: According to IBM, a hallucination occurs when a large language model (LLM) perceives patterns or objects that are nonexistent or imperceptible to human observers, which leads to misleading outputs. For instance, a lawyer who used ChatGPT for legal documentation encountered this issue when the generative AI cited fictitious cases.

The quality of training data: Healthcare is a highly nuanced, complex, and constantly evolving field of research and practice that demands extremely sophisticated and timely knowledge and information. The vast data pool AI models are trained on might not always be clinically validated, leading to inaccuracies in medical reasoning if not double-checked.

The complexity of healthcare concepts: Generative AI models can manage medical terminology, but they falter when it comes to interpreting intricate healthcare concepts. For example, if you ask generative AI models from OpenAI or Google questions such as “What is diabetes? How is it caused? What are the symptoms? How do you best take care of it?”, those models might generate good answers. But if you give the same models a patient’s data (say, loaded as C-CDA, HL7, or FHIR) and ask whether the patient has diabetes, they will fail miserably.

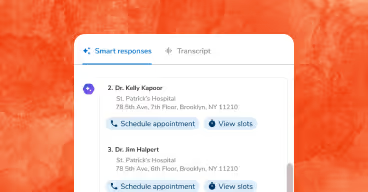

The unrealistic need for prompt engineering: Anyone who plays with free-to-use proprietary models from providers like OpenAI or Google soon realizes that the way you ask the question is as important as, or more important than, the data the model is trained on and even the quality of the model itself. However, training millions of healthcare workers and patients on how to write queries is not an option. AI models must be robust enough, and engineered well enough, that anyone at any level of expertise can use them.

The lack of transparency: Healthcare has spent decades feeding data to predictive models. Clinicians, however, will not use or trust the output from those models because the answers do not include explanations or transparency. A “black box” approach will not improve confidence. To gain a clinician’s trust, a model that predicts 30-day readmission for a discharged patient, for example, must also include the reasons or factors on which it bases its results. Generative AI models will be viewed as even more of a black box than predictive models unless the facts of the case and citations are presented to clinicians.

Introducing Sara: AI for Healthcare by Innovaccer

Taking all of these challenges into account, Innovaccer built a healthcare-specific AI solution called "Sara", designed to help meet some of healthcare’s greatest operational needs. Here’s how Sara addresses these challenges:

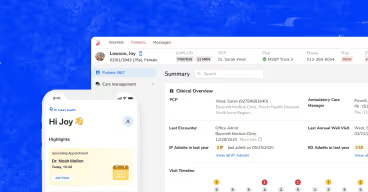

Data Proficiency: With experience in unifying records for over 60 million individuals in the US, Innovaccer's platform is adept at understanding diverse healthcare data. We know how to unify, clean, and interpret healthcare data. As a result, Innovaccer is able to go beyond the context of the setting (for example, a patient-provider conversation in an exam room or a call center interaction) and include other rich sources of data as input for the generative AI model.

Comprehensive Training: Sara is well-versed in over 10 million clinical terms and a myriad of administrative and reimbursement concepts, enabling a deep understanding of healthcare intricacies. The following outlines the extent of healthcare training Sara goes through:

- Clinical terminology: Over 10 million clinical terms covering diseases, medications, labs, vitals, problems, complaints, allergies, immunizations, and more, across 50+ coding systems such as ICD, CPT, SNOMED, RxNorm, NDC, CDC, and CMS.

- Administrative concepts: Sara includes over 10 million healthcare providers, 200,000 healthcare facilities, and 1000 health plans and understands who they are, their identification and databases, and how they operate.

- Reimbursement concepts: Sara is trained on over 50 episodes of care, 300 cost centers, 1000 revenue center codes, 1000 DRGs, 30,000 CPT codes, and more than 1000 reimbursement formulas like PMPM or IP / 1000 and understands how the cost of care is measured with various cuts and how reimbursements in fee-for-service and value-based care models take place.

- Quality of care concepts: With over 3,000 value-sets like 200 ICD-10 codes that describe diabetes or 400 NDC codes that constitute a diabetic medication, and over 500 quality measures, Sara understands the quality of care measurements and/or the absence of them.

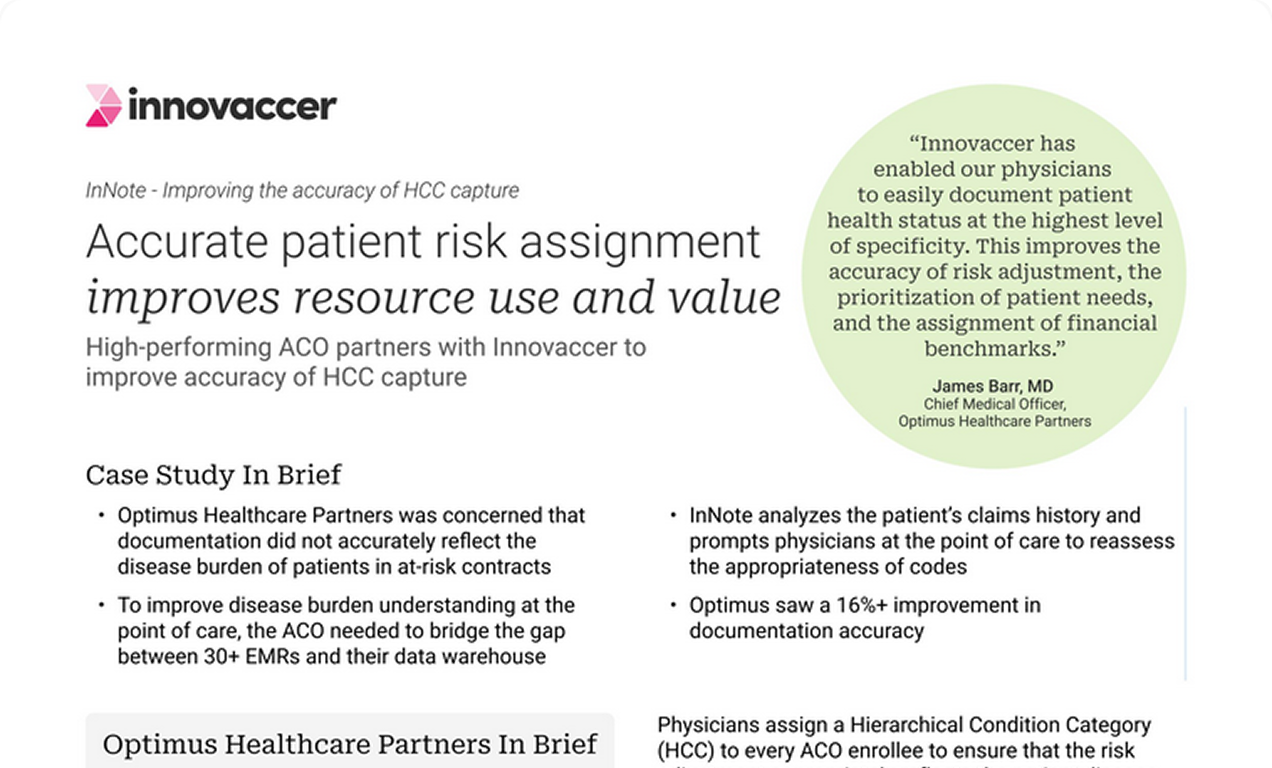

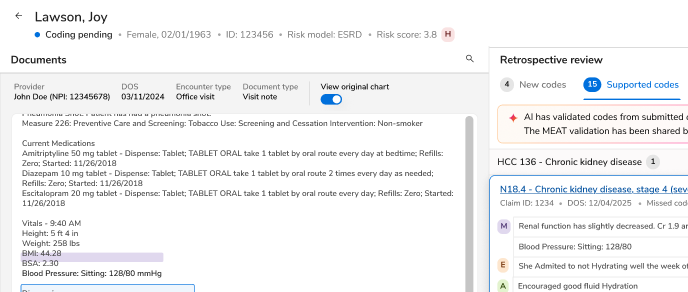

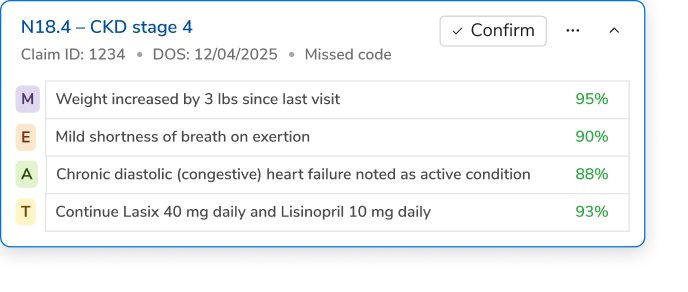

- Risk and coding: Sara understands more than 30,000 ICD-10 codes and their interplay with 200 financial risk categories (or HCCs) or 100 clinical risk categories as well as suspect conditions based on evidence found in clinical or claims data.

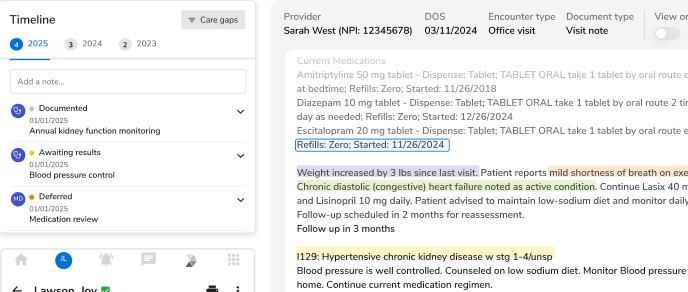
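The reimbursement formulas named above, PMPM and IP / 1000, can be written out explicitly. The following is a minimal sketch using the commonly used definitions (cost per member per month, and annualized inpatient admissions per 1,000 members); it is not Sara's implementation, and the function names and population figures are hypothetical.

```python
def pmpm(total_cost: float, member_months: int) -> float:
    """Per member per month: total cost divided by total member months."""
    if member_months <= 0:
        raise ValueError("member_months must be positive")
    return total_cost / member_months


def inpatient_per_1000(admissions: int, member_months: int) -> float:
    """Annualized inpatient admissions per 1,000 members.

    Member months are converted to member years (x12) and scaled to
    1,000 members (x1000).
    """
    if member_months <= 0:
        raise ValueError("member_months must be positive")
    return admissions * 12_000 / member_months


# Hypothetical population: 2,000 members enrolled for a full year.
member_months = 2_000 * 12
print(pmpm(9_600_000.0, member_months))        # 400.0
print(inpatient_per_1000(150, member_months))  # 75.0
```

Both formulas share member months as the denominator, which is why enrollment data has to be unified before either measure can be cut by line of business, provider, or time period.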

Continuous Learning through User Feedback: Sara evolves through user interactions, refining its capabilities with each user edit. If a SOAP note was generated by Sara, but a few lines were edited by a clinician before putting it back in their EMR, Sara knows it and becomes more intelligent with that feedback over time.

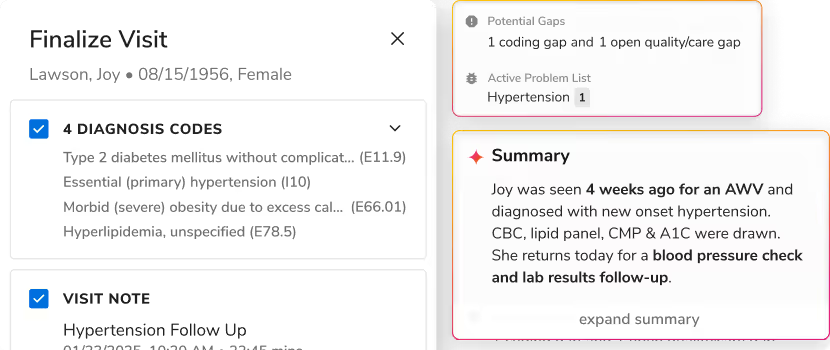

Transparency: Unlike other generative AI models, Sara provides clear explanations for its outputs, ensuring reliability and trustworthiness in clinical settings. For example, if Sara generates a SOAP note based on a patient-provider conversation, it will generate not only the SOAP note but also a full transcript of the conversation, including any clinically relevant facts it acquired from the conversation and prior clinical data. As another example, if Sara provides an aggregated data report based on an English query from a user, it will also show how it interpreted the query, with operands, logical operators, and filters, along with the resulting SQL query.
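To make the reporting example concrete, here is a minimal sketch of what returning an interpretation alongside the SQL could look like. It is an illustration only, not Sara's actual design: the `Interpretation` and `Filter` classes, the `member_cost_summary` table, and its column names are all hypothetical, and the question being interpreted is assumed to be "Show me PMPM by line of business for 2023".

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Filter:
    column: str
    operator: str
    value: str


@dataclass
class Interpretation:
    measure: str  # aggregate expression the question was mapped to
    table: str    # hypothetical source table
    group_by: List[str] = field(default_factory=list)
    filters: List[Filter] = field(default_factory=list)

    def to_sql(self) -> str:
        # Values are inlined for display only; real code should use
        # parameterized queries instead of string formatting.
        columns = ", ".join(self.group_by + [self.measure])
        sql = f"SELECT {columns} FROM {self.table}"
        if self.filters:
            conditions = " AND ".join(
                f"{f.column} {f.operator} '{f.value}'" for f in self.filters
            )
            sql += f" WHERE {conditions}"
        if self.group_by:
            sql += " GROUP BY " + ", ".join(self.group_by)
        return sql

    def explain(self) -> str:
        # Show the user how the question was read, then the SQL itself.
        filters = "; ".join(
            f"{f.column} {f.operator} {f.value}" for f in self.filters
        )
        return "\n".join([
            f"Measure: {self.measure}",
            "Grouped by: " + (", ".join(self.group_by) or "(none)"),
            "Filters: " + (filters or "(none)"),
            f"SQL: {self.to_sql()}",
        ])


interpretation = Interpretation(
    measure="SUM(paid_amount) / SUM(member_months) AS pmpm",
    table="member_cost_summary",
    group_by=["line_of_business"],
    filters=[Filter("service_year", "=", "2023")],
)
print(interpretation.explain())
```

Keeping the interpretation as structured data, rather than only as generated SQL text, lets an analyst check each piece (measure, grouping, filters) before trusting the numbers.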

Accountability: It is very important that Sara doesn’t provide misleading output or hallucinate. Thus, Sara must know whether it has all the information necessary to answer a question and, if not, say “no” or “I don’t know.” For example, if a SOAP note is generated by listening to a patient-provider conversation in an exam room, and the clinician did not discuss any lab results or other objective assessments, the “Objective” part of the SOAP note will be empty. In another example, if Sara is asked to generate an insight from a user's query and does not understand a clinical term in that query, it will state that it cannot understand that term.
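Both behaviors described here, declining on an unknown term and leaving an unsupported SOAP section empty, amount to checking for evidence before producing output. The sketch below illustrates that idea under stated assumptions; the vocabulary, function names, and response wording are hypothetical and do not describe Sara's internals.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical, deliberately tiny vocabulary for illustration.
KNOWN_TERMS: Set[str] = {
    "pmpm", "diabetes", "emergency department", "line of business",
}

SOAP_SECTIONS = ["Subjective", "Objective", "Assessment", "Plan"]


def unknown_terms(terms: Iterable[str], vocabulary: Set[str]) -> List[str]:
    """Return the terms that are not in the vocabulary (case-insensitive)."""
    return [t for t in terms if t.lower() not in vocabulary]


def respond(terms: List[str]) -> str:
    """Decline explicitly instead of guessing when a term is unknown."""
    missing = unknown_terms(terms, KNOWN_TERMS)
    if missing:
        return "I don't understand: " + ", ".join(missing)
    return "All terms recognized"


def build_soap_note(facts: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Fill each section only from supplied facts; nothing is invented."""
    return {section: list(facts.get(section, [])) for section in SOAP_SECTIONS}


print(respond(["PMPM", "frailty index"]))
note = build_soap_note({"Subjective": ["Patient reports fatigue"]})
print(note["Objective"])  # [] because no objective findings were discussed
```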

Conclusion: AI for Healthcare is Here

Technology investments are big decisions, especially when those decisions affect workflow and organizational performance so directly. In healthcare, such decisions are even more important because lives are at stake, and the healthcare industry is so complex and dynamic.

The past 25 years of technological innovation have provided some important lessons for healthcare. To be a powerful force for productivity improvement (rather than a detriment), technology must be healthcare-specific, user-friendly, and reliable. If a given platform is missing any of these ingredients, it will not be adopted with enthusiasm.

Generative AI models have the potential to revolutionize operational, administrative, and clinical performance. However, technology leaders and users must choose wisely when deciding which models and tools to incorporate. That’s why we’re so excited about introducing Sara to healthcare—because we know it’s one of a kind.

Sara in Action: Revolutionizing Healthcare Practices

Provider

From a provider’s perspective:

- Enhanced trust: Sara avoids hallucination by relying on factual data, thereby earning the clinician's trust.

- Utilizing data in background: Incorporating a patient's clinical history, Sara offers more nuanced diagnoses.

- Enhanced care and reimbursement support: Sara aids clinicians in navigating the complexities of patient care and reimbursement by surfacing quality and coding gaps, among other important information.

| Use-Case | Innovaccer Sara | Other Ambient Scribe Technology Providers |
| --- | --- | --- |
| Transcription | Yes | Yes |
| Microphone / Mobile voice | Yes | Yes |
| Generate SOAP Note | Yes | Yes |
| Says no if it can’t see it in data | Yes | Very few |
| Shows clinically relevant facts to clinician | Yes | No |
| Uses prior clinical history as well for differential diagnosis | Yes | No |
| Alerts on quality or coding gaps | Yes | No |

Analyst

From an analyst’s perspective:

- Data query accuracy: When compared to other AI models like OpenAI GPT-4, Sara exhibited superior accuracy (83%) in understanding and executing complex healthcare queries.

- Understanding healthcare nuances: Unlike other platforms, Sara is proficient in understanding healthcare-specific terms and formulas.

Innovaccer used a body of over 1,000 English-language queries, typical of the healthcare data questions submitted by executives and analysts, each paired with the desired SQL query output. The queries cover concepts such as cost, quality, risk, membership, and network, and range from as simple as “Show me PMPM by line of business” to as complex as “How many and which members have diabetes, high blood pressure, and have been in an emergency department more than twice in the last six months?”
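To show why the second kind of question is hard, the sketch below spells out the logic it implies: two diagnosis value sets, a lookback window, and a visit-count threshold. Everything here is an illustrative assumption, including the one-code "value sets" (E11.9 and I10 are real ICD-10 codes, but real value sets contain many more), the 183-day reading of "six months", and the member records.

```python
from datetime import date, timedelta
from typing import Dict, List

# Illustrative one-code subsets; real value sets are far larger.
DIABETES_CODES = {"E11.9"}    # type 2 diabetes without complications
HYPERTENSION_CODES = {"I10"}  # essential (primary) hypertension

# Hypothetical member records: diagnosis codes and ED visit dates.
members: Dict[str, dict] = {
    "M1": {"dx": {"E11.9", "I10"},
           "ed_visits": [date(2024, 1, 10), date(2024, 3, 2), date(2024, 5, 20)]},
    "M2": {"dx": {"E11.9"},
           "ed_visits": [date(2024, 2, 1), date(2024, 3, 1), date(2024, 4, 1)]},
    "M3": {"dx": {"E11.9", "I10"},
           "ed_visits": [date(2024, 4, 1)]},
}


def cohort(records: Dict[str, dict], as_of: date, window_days: int = 183) -> List[str]:
    """Members with diabetes AND hypertension AND more than two ED visits
    within the lookback window ending on `as_of`."""
    start = as_of - timedelta(days=window_days)
    matched = []
    for member_id, rec in records.items():
        recent = [d for d in rec["ed_visits"] if start <= d <= as_of]
        has_diabetes = bool(rec["dx"] & DIABETES_CODES)
        has_hypertension = bool(rec["dx"] & HYPERTENSION_CODES)
        if has_diabetes and has_hypertension and len(recent) > 2:
            matched.append(member_id)
    return sorted(matched)


result = cohort(members, as_of=date(2024, 6, 30))
print(len(result), result)  # 1 ['M1']
```

Answering "how many and which" means returning both the count and the member list, and each clinical phrase in the question has to be resolved to a code set before any filtering can happen.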

To be fair to proprietary all-purpose generative AI models like OpenAI GPT-4, we embedded our data model and healthcare terminology with their models to create a level playing field. The accuracy results are below, measured as the percentage of queries for which the desired SQL query output was achieved.

| | OpenAI GPT-3.5N (with Innovaccer Data Model and Healthcare Terminology embedded) | OpenAI GPT-4 (with Innovaccer Data Model and Healthcare Terminology embedded) | Innovaccer Sara |
| --- | --- | --- | --- |
| Innovaccer’s 1,000-query healthcare data corpus (comprising concepts like cost, quality, risk, membership, network, etc.) | 53% | 72% | 83% |
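The accuracy metric described above is the share of queries for which the generated SQL achieved the desired output. The source does not say how a match was judged, so the sketch below assumes the simplest possible rule: exact match after normalizing whitespace, case, and a trailing semicolon. The function names and sample pairs are hypothetical.

```python
from typing import List, Tuple


def normalize(sql: str) -> str:
    """Crude normalization: drop a trailing semicolon, collapse whitespace,
    and lowercase. Lowercasing also alters string literals, so this is only
    suitable as a rough first-pass comparison."""
    return " ".join(sql.strip().rstrip(";").lower().split())


def accuracy(pairs: List[Tuple[str, str]]) -> float:
    """Percentage of (generated, desired) pairs that match after normalization."""
    if not pairs:
        raise ValueError("pairs must not be empty")
    matches = sum(normalize(got) == normalize(want) for got, want in pairs)
    return 100.0 * matches / len(pairs)


# Hypothetical (generated, desired) pairs.
sample = [
    ("SELECT lob, SUM(cost) FROM claims GROUP BY lob;",
     "select lob, sum(cost) from claims group by lob"),
    ("SELECT COUNT(*) FROM members", "SELECT COUNT(*)  FROM members"),
    ("SELECT * FROM members", "SELECT member_id FROM members"),
    ("SELECT 1 ;", "SELECT 1"),
]
print(accuracy(sample))  # 75.0
```

A stricter evaluation would run both queries and compare result sets, since two differently written SQL statements can return the same data.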

When compared to other reporting / BI platforms, Sara stands out in healthcare specificity, which is the more important and time-consuming part of the task, saving analysts hours.

| | Innovaccer Sara | Microsoft PowerBI | Salesforce Tableau | Domo |
| --- | --- | --- | --- | --- |
| Understand tables and column names | Yes | Yes | Yes | Yes |
| Build a SQL query from guided drop-downs or keywords | Yes | Yes | Yes | Yes |
| Build a SQL query from English query | Yes | Yes | No | Somewhat |
| Understand healthcare terms | Yes | No | No | No |
| Understand healthcare formulas | Yes | No | No | No |

Learn more about Sara and contact the Innovaccer team about implementing it in your workflow.
