The ABC of Integrated Care: Smart Integration over Just Integration

“Work smarter, not harder” is something we’ve all heard on multiple occasions. It may sound like any other wry motivational poster, but come to think of it, it could also be a good mantra for healthcare, especially as we look forward to a data-driven infrastructure.

With more and more data sources springing up, healthcare is becoming a complex landscape. Stitching these sources together to unlock critical insights is going to require the right tools and, more importantly, the right data. Several platforms for integrating data sources exist and are flourishing, but in the era of value-based care, where even a sliver of data could make or break an outcome, it’s time to switch to smart integration.

The first step: smart data

Yes, the first step that smart integration requires is smart data. How many times have you torn a room apart searching for something you were sure you had kept in a certain place, only to discover it on a tiny shelf in a little compartment?

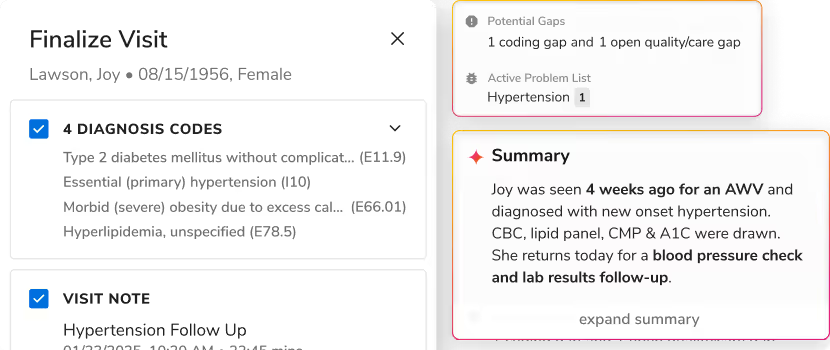

The same happens in healthcare. Healthcare organizations store petabytes of data but often can’t access the right information at the right time. Data in healthcare is about much more than storing it for a rainy day: it’s about organizing, analyzing, and presenting processed information that answers the looming questions at the point of care. Here’s how organizations can be smart about their choices and gather smart data:

- Relying on data standards like HL7 and DICOM that will allow disparate systems to be interoperable.

- Investing in clinical documentation improvement and ensuring the integrity of gathered data.

- Engaging in optimized data management strategies that would deliver integrated, error-free data at the right time.
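To make the point about data standards concrete, here is a minimal sketch of why a shared format helps: because HL7 v2 messages place fields in agreed positions, a few lines of code can pull patient demographics out of any compliant feed. The sample message and the helper names (`parse_segments`, `extract_patient`) are hypothetical, and the sketch ignores escape sequences and repeating fields that a production parser would need to handle.

```python
# Hypothetical HL7 v2 message; every value here is made up for illustration.
SAMPLE_HL7 = "\r".join([
    "MSH|^~\\&|SENDING_APP|SENDING_FAC|RECEIVING_APP|RECEIVING_FAC|20170101120000||ADT^A01|MSG00001|P|2.5",
    "PID|1||12345^^^HOSP^MR||DOE^JANE||19800101|F",
])


def parse_segments(message):
    """Group segments by their three-letter ID, splitting fields on '|'."""
    segments = {}
    # HL7 v2 separates segments with carriage returns; tolerate newlines too.
    for line in message.replace("\n", "\r").split("\r"):
        if not line.strip():
            continue
        fields = line.split("|")
        segments.setdefault(fields[0], []).append(fields)
    return segments


def extract_patient(message):
    """Read a few demographics from the first PID segment."""
    pid = parse_segments(message)["PID"][0]
    # PID-5 is the patient name: family^given, separated by '^'.
    family, given = (pid[5].split("^") + [""])[:2]
    return {
        "mrn": pid[3].split("^")[0],  # PID-3: patient identifier list
        "family_name": family,
        "given_name": given,
        "birth_date": pid[7],         # PID-7: date of birth
        "sex": pid[8],                # PID-8: administrative sex
    }


patient = extract_patient(SAMPLE_HL7)
```

The same function would work on a feed from any system that follows the standard, which is the practical meaning of interoperability here.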

Transforming unstructured data into an asset

Taking a good look at our choices seems like a good option. But when we dive deeper, we realize that much of what we have is unstructured data. This unstructured data is often unusable in its current form and requires some work to make it usable.

What we’re dealing with right now is a tremendous number of EMRs, X12 835/837 files, HL7 feeds, CCDA documents, and ADT feeds, among many other sources. Add to this the images, paper records, and lab test results, and we have a plethora of structured and unstructured data sources.

It’s time to move away from the paradigm of structured data entries and the structured way of looking at gathered information. We are at that point in healthcare where the technology we have is sophisticated enough to allow us to do a lot with unstructured data repositories. The focus has to shift to mining unstructured data, handling unstructured data within structured headers, and building smart data repositories that can deal with all kinds of data formats.

True, raw data alone can’t lead to systematic improvements. Big data has to be transformed into ‘better data.’ Enter a healthcare data lake to make it all smarter.

Switching to smart integration with a data lake

A data lake, in simple terms, is an open reservoir that can ingest tremendous amounts of healthcare data, often built on the Hadoop framework. The data lake can receive and store various types of data while structuring and cleaning it to suit specific use cases. For example, by using a data lake as a repository, a healthcare organization can store all the medications and clinical treatments a patient has ever undergone.

Now here’s the best thing about a data lake: it doesn’t apply any analytic algorithm or perform any transformation up front. Whenever the data is needed, the user can retrieve it from the data lake. For instance, if a provider would like to know whether a patient was ever prescribed a specific medication, they can apply that particular transformation to the stored data and filter out the results.
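The idea of storing first and transforming only when a question is asked can be sketched in a few lines. In this illustration the "lake" is just a list of raw JSON strings kept exactly as they arrived; the records, field names, and the `was_prescribed` helper are all assumptions made for the example, not a description of any particular product.

```python
import json

# Raw events stored untouched, one JSON document per line (hypothetical data).
RAW_LAKE = [
    '{"patient_id": "p1", "type": "medication", "name": "Metformin", "date": "2016-03-01"}',
    '{"patient_id": "p1", "type": "lab", "test": "HbA1c", "value": 7.2}',
    '{"patient_id": "p2", "type": "medication", "name": "Lisinopril"}',
    '{"patient_id": "p1", "type": "medication", "name": "atorvastatin"}',
]


def was_prescribed(raw_records, patient_id, medication):
    """Parse and filter at read time; nothing was transformed at load time."""
    for line in raw_records:
        record = json.loads(line)
        if (
            record.get("patient_id") == patient_id
            and record.get("type") == "medication"
            and record.get("name", "").lower() == medication.lower()
        ):
            return True
    return False


print(was_prescribed(RAW_LAKE, "p1", "metformin"))
print(was_prescribed(RAW_LAKE, "p2", "metformin"))
```

Note that the records do not share a schema (the lab result has no `name` field), yet the query still works because structure is imposed only by the question being asked.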

Usually, a data lake is built on the Hadoop Distributed File System (HDFS), which can accommodate data from disparate sources and works with MapReduce. The data then passes through extract, transform, and load (ETL) methods for collection and integration, and is later processed by Spark, an analytics framework.

What is the key to building a value-based data lake?

- Fast and seamless loading: Loading should not create development bottlenecks for data ingestion pipelines; rather, it should allow any type of data to be loaded seamlessly and consistently. Data ingestion pipelines should be fast and automated, should manage target structures themselves, and should not require target schemas to be built before loading.

- Flexibility and discovery: The data lake has to be flexible and not tied to a particular technology pattern or behavior. It should provide an agile development environment and have flexible data refinement policies in place.

- Quick data transformation: The data lake should deploy quick data transformation techniques and should have the capability to rapidly implement ready-to-use transformations.

- Analyze, balance, and control: After integration, the data lake should be able to analyze the data, provide accurate statistics, and use these insights to create better processes with integrated value.

- Ready on-the-fly: The data lake should be able to transform integrated data, provide real-time operations, monitor those operations, and rectify errors and broken transformations with real-time alerts on new data arrivals.
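Two of the points above, loading without a predefined target schema and raising alerts on broken transformations, can be illustrated with a small sketch. The `Pipeline` class, its fields, and the two transform functions are hypothetical names invented for this example; a real data lake would run this kind of logic on a distributed engine rather than in a single Python process.

```python
from dataclasses import dataclass, field


@dataclass
class Pipeline:
    """Run records through transforms; quarantine and alert on failures."""
    transforms: list
    loaded: list = field(default_factory=list)
    quarantine: list = field(default_factory=list)
    alerts: list = field(default_factory=list)

    def ingest(self, record):
        current = dict(record)  # accept any shape; no target schema required
        for transform in self.transforms:
            try:
                current = transform(current)
            except Exception as exc:
                # Keep the untouched original so nothing is lost, and raise an alert.
                self.quarantine.append(record)
                self.alerts.append(f"{transform.__name__} failed on {record!r}: {exc}")
                return
        self.loaded.append(current)


def normalize_name(record):
    return {**record, "name": record["name"].strip().title()}


def parse_age(record):
    return {**record, "age": int(record["age"])}


pipeline = Pipeline([normalize_name, parse_age])
pipeline.ingest({"name": " jane doe ", "age": "37"})
pipeline.ingest({"name": "john roe", "age": "unknown"})  # breaks parse_age
print(pipeline.loaded)
print(pipeline.alerts)
```

The design choice worth noting is that a failed record is set aside in its original form rather than dropped, so the transformation can be fixed and rerun later.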

Progressing with APIs for data exchange

Ever since the development of APIs for healthcare data, there have been several opportunities to pool data from multiple sources like EHRs, mobile applications, and devices. Plus, since the development of HL7 FHIR, which is well suited to API-based data exchange, there are new integration and exchange mechanisms that allow providers to access data in a standardized manner, directly at the source of truth.

In the coming days, healthcare data lakes will be able to ingest incoming data sources easily through APIs. This will make it easier for external systems to push their data onto the ETL pipelines, and will provide frameworks to easily configure and test connectors that pull data, allowing more and more data sources to be integrated together.
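As a rough illustration of what a FHIR connector might do once an API response arrives, the sketch below flattens a FHIR Bundle into simple rows ready for a pipeline. The field names follow the FHIR R4 `MedicationRequest` resource as we understand it; the sample bundle, the `medication_rows` helper, and the output column names are assumptions for the example, and no network call is made.

```python
# Hypothetical search result, shaped like a FHIR R4 Bundle.
SAMPLE_BUNDLE = {
    "resourceType": "Bundle",
    "type": "searchset",
    "entry": [
        {"resource": {
            "resourceType": "MedicationRequest",
            "id": "mr-1",
            "status": "active",
            "intent": "order",
            "subject": {"reference": "Patient/p1"},
            "medicationCodeableConcept": {"text": "Metformin 500 mg tablet"},
        }},
        {"resource": {"resourceType": "Patient", "id": "p1"}},
    ],
}


def medication_rows(bundle):
    """Keep only MedicationRequest entries and flatten them into rows."""
    rows = []
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        if resource.get("resourceType") != "MedicationRequest":
            continue
        rows.append({
            "request_id": resource.get("id"),
            "patient": resource.get("subject", {}).get("reference"),
            "medication": resource.get("medicationCodeableConcept", {}).get("text"),
            "status": resource.get("status"),
        })
    return rows


print(medication_rows(SAMPLE_BUNDLE))
```

Because every FHIR server returns resources in the same shape, one connector like this can, in principle, serve many sources.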

The road ahead

We are at the very beginning of the era of data-driven care. This means that healthcare might be laying the groundwork for years to come and could learn a thing or two from other industries. One fact remains true: the amount of healthcare data integrated and exchanged today is greater than ever and will only continue to rise, and integration in such an ecosystem requires quality. Data in healthcare is big and messy enough as it is, and a lack of strategic planning will lead to confusion, time-consuming workflows, errors, and a longer route to value-based care. Data that is readily available and has compelling stories to tell: that’s the dream!

To learn more about our work with healthcare data integration and our state-of-the-art data lake, get a demo.

For more updates, subscribe.

Meet us at the upcoming IHI National Forum 2017 in Orlando, Florida, at booth #106 and learn how we can assist you on your journey to value-based care.
