Data Pulse: The Neural Network of Healthcare Data Observability

Healthcare data management faces significant challenges in today’s digital landscape. Chief among them is the explosive growth in data volume. That growth is compounded by the complexity of diverse formats such as HL7, CCDs, X12, and CCLF, and by a wide variety of data sources, including claims, clinical, provider, and patient data. Varying data arrival schedules further complicate timeliness, and maintaining accuracy and completeness across all data streams requires a delicate balancing act.

These challenges create a ripple effect across healthcare organizations, compromising data quality, hindering efficient decision-making, and ultimately affecting patient care. The problem is compounded because healthcare data is not always immediately consumable for use cases such as analytics, KPI dashboards, applications, and reporting.

This raises an important question: can we trust the insights derived from this data to inform decision-making? The answer depends on the transparency and reliability of data as it moves through the various stages of the healthcare data platform.

The following examples illustrate how data issues at various stages of data propagation affect downstream consumers:

| Stage | Type of Issue(s) | Possible Impact on Data Consumers |
|---|---|---|
| Data Acquisition | Schema Mismatch | |
| | Delayed Data | |
| Data Parsing | Missing Values (e.g., diagnosis codes) | Incorrect HCC scores, which are used to calculate risk adjustment payments under Medicare Advantage (MA). Incorrect insights on patient risk, leading to suboptimal care recommendations. |
| | Duplicate Records | |
| Data Standardization | Inconsistent Data Formats | |
| | Incorrect Data Mapping (e.g., medications wrongly mapped to NDCs) | |
| | Unstandardized Codes | |
| | Misaligned Data | |

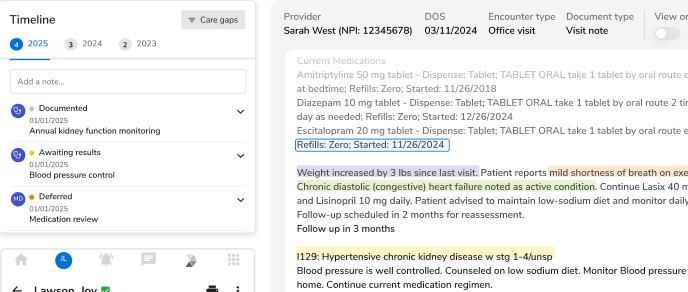

To address these issues, healthcare organizations need robust data integration, standardized validation and processing, advanced data governance and quality control, and scalable infrastructure and analytics.

Ensuring data accuracy, timeliness and consistency throughout its journey is critical. Providing clear visibility into the data lifecycle helps answer questions like "Where is the data?", "Has the data been transformed correctly?", and "What is the quality at each stage?". This transparency builds trust in the value generated by data consumers, empowering confident, data-driven decisions in healthcare.

Data Trust and Transparency: The Role of Quality and Observability

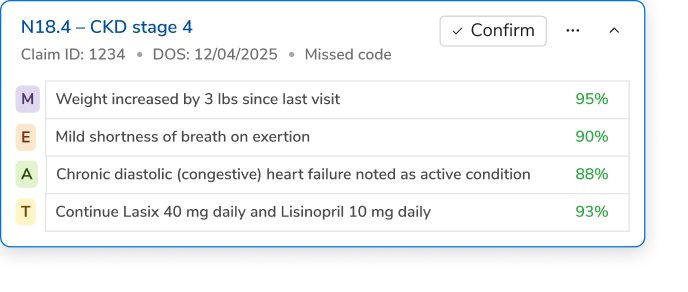

Trust in data grows with data transparency, and mature data observability is what provides that transparency. AI-enabled pattern-recognition checks and real-time observability let the platform catch anomalies such as source-driven delays, schema mismatches, and partial data at an early stage, keeping the data behind patient care trustworthy. A "fail fast, shift left" approach identifies issues before they escalate, while proactive data quality controls, such as automated validations and circuit breakers, prevent errors like duplicate patient records or invalid procedure codes, supporting compliance and reducing risk.

Audit trails, data lineage, and data catalogs and dictionaries offer full visibility into data movement, enabling users to trace data to its origins, resolve issues quickly, and make informed decisions. With real-time insights into the ETL process, data operations teams can monitor data flow, detect anomalies, and act immediately, building user trust and improving the reliability of healthcare applications.
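The "partial data" case can be made concrete with a very simple statistical check. The sketch below is not Data Pulse's pattern-recognition method, which the text does not describe; it swaps in a plain z-score test on per-batch record counts, and the function name, threshold, and sample counts are hypothetical.

```python
from statistics import mean, stdev


def is_volume_anomaly(history: list[int], current: int, z_threshold: float = 3.0) -> bool:
    """Flag a batch whose record count deviates sharply from recent batches.

    A z-score stand-in for pattern-recognition checks (hypothetical threshold).
    """
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu  # perfectly stable feed: any change is unusual
    return abs(current - mu) / sigma > z_threshold


# Daily record counts for a hypothetical claims feed
history = [10_120, 9_980, 10_250, 10_040, 10_180]
print(is_volume_anomaly(history, 10_100))  # False: a typical day
print(is_volume_anomaly(history, 4_300))   # True: likely a partial file
```

A production check would also need to account for seasonality (for example, lighter weekend feeds) and would typically compare against a rolling window rather than a fixed list.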

How Data Pulse Solves the Challenge of Data Trust: Enabling the Shift from Reactive to Proactive

Data Pulse Capabilities Framework

Metadata: Powering Validations and Spotting Anomalies

Data Pulse unlocks metadata at every level (structural, descriptive, and operational) and tags data from multiple sources with rich business context, giving a clear view of data lineage through a business-centric lens. This multi-layered metadata powers essential checks for timeliness, completeness, and consistency across all formats: delimited files, HL7, X12, CCLF, and CCDs. The platform automatically enforces the relevant data quality rules from a central library, ensuring accurate and compliant ingestion from the start. Data stewards and subject-matter experts retain full control, associating datasets with key data categories for deeper insights and governance.
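As an illustration of metadata driving validations, the sketch below attaches structural, descriptive, and operational metadata to a dataset and derives timeliness and completeness checks from it. The `DatasetMetadata` fields, the feed name, and the sample records are hypothetical and are not Data Pulse's actual schema.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class DatasetMetadata:
    """Hypothetical metadata record for one incoming feed."""
    name: str
    source_format: str                                        # structural: "X12", "HL7", "CCLF", ...
    expected_by: datetime                                     # operational: arrival SLA
    required_fields: list[str] = field(default_factory=list)  # structural: mandatory fields
    category: str = ""                                        # descriptive: business context tag


def check_timeliness(meta: DatasetMetadata, arrived_at: datetime) -> bool:
    """True when the batch arrived on or before its expected time."""
    return arrived_at <= meta.expected_by


def check_completeness(meta: DatasetMetadata, records: list[dict]) -> list[str]:
    """List records whose required fields are absent or empty."""
    issues = []
    for i, record in enumerate(records):
        missing = [f for f in meta.required_fields if not record.get(f)]
        if missing:
            issues.append(f"record {i}: missing {', '.join(missing)}")
    return issues


meta = DatasetMetadata(
    name="payer_837_claims",
    source_format="X12",
    expected_by=datetime(2024, 1, 15, 6, 0),
    required_fields=["member_id", "claim_id", "dx_code"],
    category="claims",
)
records = [
    {"member_id": "M1", "claim_id": "C1", "dx_code": "E11.9"},
    {"member_id": "M2", "claim_id": "C2", "dx_code": ""},
]
print(check_timeliness(meta, datetime(2024, 1, 15, 7, 30)))  # False: arrived 90 minutes late
print(check_completeness(meta, records))  # ['record 1: missing dx_code']
```

Because the checks read their expectations from metadata rather than hard-coding them, onboarding a new feed in this sketch means describing it, not writing new validation code.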

Data Sensors and Circuit Breakers: The Guardians of Data Integrity

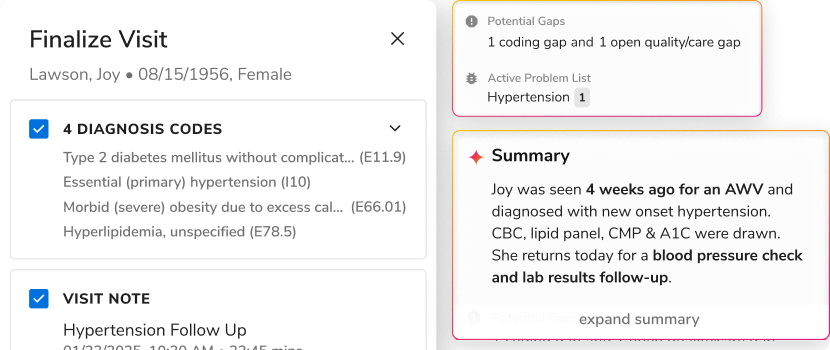

Data sensors are automated checks that continuously monitor data quality across multiple dimensions, ensuring that data in motion is up to standard, while circuit breakers act as safety mechanisms that halt ingestion to prevent downstream impact. These dynamic tools play a critical role in maintaining data integrity throughout the ETL process, where even a small error can snowball into significant issues like incorrect patient care decisions, faulty analytics, or compliance failures.
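A minimal sketch of how sensors and a circuit breaker can fit together: each sensor is a per-record predicate, and the breaker halts the load when any sensor's failure rate exceeds a configured threshold. The class names, the 5% threshold, and the sample sensors are assumptions for illustration, not Data Pulse's implementation.

```python
from dataclasses import dataclass
from typing import Callable

Record = dict
Sensor = Callable[[Record], bool]  # returns True when a record passes the check


class CircuitOpenError(RuntimeError):
    """Raised to stop ingestion before bad data reaches downstream layers."""


@dataclass
class CircuitBreaker:
    max_failure_rate: float  # e.g. 0.05 trips the breaker when more than 5% of records fail

    def evaluate(self, records: list[Record], sensors: dict[str, Sensor]) -> dict[str, int]:
        """Run every sensor on every record; raise if any sensor fails too often."""
        failures = {name: 0 for name in sensors}
        for record in records:
            for name, sensor in sensors.items():
                if not sensor(record):
                    failures[name] += 1
        total = max(len(records), 1)  # avoid division by zero on empty batches
        for name, count in failures.items():
            if count / total > self.max_failure_rate:
                raise CircuitOpenError(f"sensor '{name}' failed {count}/{total} records; halting load")
        return failures


# Hypothetical sensors for a claims batch
sensors: dict[str, Sensor] = {
    "member_id_present": lambda r: bool(r.get("member_id")),
    "paid_amount_non_negative": lambda r: r.get("paid_amount", 0) >= 0,
}
breaker = CircuitBreaker(max_failure_rate=0.05)

good_batch = [{"member_id": "M1", "paid_amount": 120.0}, {"member_id": "M2", "paid_amount": 80.0}]
print(breaker.evaluate(good_batch, sensors))  # {'member_id_present': 0, 'paid_amount_non_negative': 0}

bad_batch = [{"member_id": "M1", "paid_amount": 120.0}, {"member_id": "", "paid_amount": 80.0}]
try:
    breaker.evaluate(bad_batch, sensors)
except CircuitOpenError as err:
    print(err)  # sensor 'member_id_present' failed 1/2 records; halting load
```

Evaluating the whole batch before loading is what makes this a circuit breaker rather than a row filter: a trip stops the batch, while failure counts below the threshold are returned for reporting.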

Imagine a scenario where the platform ingests clinical data in HL7, claims data in X12, and patient summaries in CCD format. This data powers a BI dashboard that tracks service utilization for chronic care patients. If a misstep such as an invalid diagnosis code slips through unchecked, it could result in misleading trends on the dashboard, prompting providers to recommend inappropriate treatments.

Data Pulse mitigates these risks by validating every data transformation with Data Sensors. Whether handling clinical systems (e.g., HL7) or claims data (e.g., X12), Data Pulse ensures that required fields are complete, codes are standardized, and mappings are accurate, preserving data integrity every step of the way. With full visibility into potential downstream impacts, users are empowered to trust their data and make informed, confident decisions.
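To make "codes are standardized" concrete for HL7, the sketch below scans an HL7 v2 message for DG1 (diagnosis) segments and checks that the code in DG1-3 has the shape of an ICD-10-CM code. It is a format check only, under the assumption that the feed sends ICD-10 codes with the default `|` and `^` delimiters; it does not look codes up in the official code set, and the function name and sample message are hypothetical.

```python
import re

# Simplified ICD-10-CM shape: letter, digit, alphanumeric, then optionally a dot and 1-4 alphanumerics.
# This validates format only; it does not confirm the code exists in the current code set.
ICD10_PATTERN = re.compile(r"^[A-Z][0-9][0-9A-Z](\.[0-9A-Z]{1,4})?$")


def validate_dg1_codes(hl7_message: str) -> list[str]:
    """Return errors for DG1 segments whose DG1-3 diagnosis code is missing or malformed."""
    errors = []
    for segment in hl7_message.splitlines():  # HL7 v2 segments end with \r; splitlines also handles \n
        fields = segment.split("|")           # assumes the default field separator
        if fields[0] != "DG1":
            continue
        set_id = fields[1] if len(fields) > 1 else "?"
        dx_field = fields[3] if len(fields) > 3 else ""  # DG1-3 Diagnosis Code (code^text^system)
        code = dx_field.split("^")[0].strip()            # assumes the default component separator
        if not code:
            errors.append(f"DG1 {set_id}: diagnosis code missing")
        elif not ICD10_PATTERN.match(code):
            errors.append(f"DG1 {set_id}: '{code}' does not match the ICD-10-CM format")
    return errors


message = "\r".join([
    "MSH|^~\\&|EHR|CLINIC|DP|DP|202401150800||ADT^A08|MSG001|P|2.5",
    "PID|1||M1",
    "DG1|1||E11.9^Type 2 diabetes mellitus without complications^I10",
    "DG1|2||250.00^Diabetes mellitus^I9C",
    "DG1|3||",
])
for error in validate_dg1_codes(message):
    print(error)
```

Here the legacy ICD-9-CM code `250.00` and the empty DG1-3 in the third segment are reported, while `E11.9` passes.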

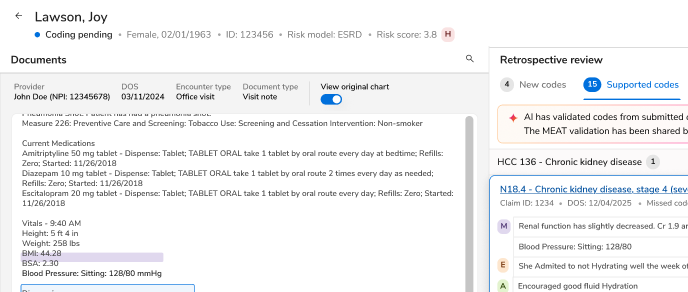

Complete Data Lineage: Unlocking Full Visibility and Control

| Question | How Data Pulse Answers It |
|---|---|
| Where is the data? | With the lineage capabilities of Data Pulse, users can pinpoint the current status of every data batch at any stage, whether it is in L0 (raw), L1 (parsed), or L5 (BI dashboard). |
| When will data reach its destination? | Real-time alerts and status updates give users visibility into potential bottlenecks or delays in data processing. Data Pulse also predicts the estimated time to destination for every batch of received data, helping ensure that SLAs are met for all business-critical use cases. |
| What happened to the data? | If a data batch fails or produces unexpected results, operations teams need to know exactly what went wrong. Data Pulse provides detailed logs and anomaly reports that explain why certain data was rejected or failed validation. |
| What is the quality of the data? | Data Pulse provides automated data quality checks and lets users configure thresholds for specific datasets or business rules. Data quality at every stage is accessible to users, so they can confirm they are working with reliable data. |
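The "where" and "when" questions can be sketched with a per-batch lineage log plus a naive arrival estimate that sums median historical durations for the stages a batch has not yet completed. Only L0, L1, and L5 are named in the text; the intermediate layer names, the class and function names, and the sample durations below are assumptions, and Data Pulse's actual prediction method is not described here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

# Assumed ordered layers; only L0 (raw), L1 (parsed) and L5 (BI dashboard) are named in the text.
STAGES = ["L0", "L1", "L2", "L3", "L4", "L5"]


@dataclass
class BatchLineage:
    batch_id: str
    events: list[tuple[str, datetime]] = field(default_factory=list)  # (stage, completed_at)

    def record(self, stage: str, completed_at: datetime) -> None:
        self.events.append((stage, completed_at))

    def current_stage(self) -> Optional[str]:
        """Answers "Where is the data?": the last stage this batch completed."""
        return self.events[-1][0] if self.events else None


def estimate_arrival(
    lineage: BatchLineage, history: dict[str, list[timedelta]], target: str = "L5"
) -> Optional[datetime]:
    """Answers "When will it arrive?": last completion time plus median durations of remaining stages."""
    stage = lineage.current_stage()
    if stage is None:
        return None
    last_completed = lineage.events[-1][1]
    remaining = STAGES[STAGES.index(stage) + 1 : STAGES.index(target) + 1]
    return last_completed + sum((median(history[s]) for s in remaining), timedelta())


# history[s] holds past durations from the previous stage to stage s (hypothetical numbers).
minutes = {"L1": [20, 25, 22], "L2": [30, 35, 40], "L3": [15, 15, 18], "L4": [45, 50, 40], "L5": [10, 12, 11]}
history = {s: [timedelta(minutes=m) for m in ms] for s, ms in minutes.items()}

batch = BatchLineage("claims-2024-01-15")
batch.record("L0", datetime(2024, 1, 15, 6, 0))
batch.record("L1", datetime(2024, 1, 15, 6, 24))
print(batch.current_stage())             # L1
print(estimate_arrival(batch, history))  # 2024-01-15 08:10:00
```

Median durations are a deliberately simple baseline; a real estimator would likely condition on source, file size, and time of day.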

Data Pulse offers real-time alerts and insights into data failures, delays, or quality issues, enabling teams to respond quickly and reduce downtime. By answering these critical questions, it helps operations teams and end users confirm that data is moving through the system as expected and that its quality is maintained.

Central DQ Rules Catalog

The centralized rule library fosters collaboration, allowing both business and technical users to define and manage reusable, source-agnostic rules for validation, standardization, and quality checks. With parameterized rules that can be invoked as needed, the framework ensures flexible, consistent data governance.
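A central catalog of parameterized, source-agnostic rules can be sketched as a versioned registry of rule templates that any dataset invokes with its own parameters. The `RuleCatalog` and `RuleTemplate` names and the two sample rules are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class RuleTemplate:
    name: str
    version: int
    check: Callable[..., bool]  # check(value, **params) -> True when the value passes
    description: str = ""


class RuleCatalog:
    """Versioned registry of reusable DQ rule templates."""

    def __init__(self) -> None:
        self._rules: dict[tuple[str, int], RuleTemplate] = {}

    def register(self, rule: RuleTemplate) -> None:
        key = (rule.name, rule.version)
        if key in self._rules:
            # Existing versions are immutable; changes must be registered as a new version.
            raise ValueError(f"{rule.name} v{rule.version} already exists; register a new version")
        self._rules[key] = rule

    def latest(self, name: str) -> RuleTemplate:
        versions = [rule for (rule_name, _), rule in self._rules.items() if rule_name == name]
        if not versions:
            raise KeyError(name)
        return max(versions, key=lambda rule: rule.version)

    def run(self, name: str, value: Any, **params: Any) -> bool:
        """Invoke the latest version of a rule with dataset-specific parameters."""
        return self.latest(name).check(value, **params)


catalog = RuleCatalog()
catalog.register(RuleTemplate("max_length", 1, lambda v, n: len(str(v)) <= n, "Value length <= n"))
catalog.register(RuleTemplate("allowed_values", 1, lambda v, values: v in values, "Value in an allowed set"))

# The same templates serve different sources and fields, parameterized per use.
print(catalog.run("max_length", "E11.9", n=7))                     # True
print(catalog.run("allowed_values", "X", values={"M", "F", "U"}))  # False
```

Keeping rules as parameterized templates means a steward adds a new source by supplying parameters, and keying by `(name, version)` preserves every earlier definition for traceability.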

Data stewards have controlled access to manage rule applicability, create custom rules, and extend the catalog to new sources, all while maintaining full version control and traceability. Any DQ rule bypass requires approval, and audit logs and comments provide complete transparency, keeping ingestions stable and governed.
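The approval requirement for rule bypasses can be sketched as a small workflow object that records every action in an append-only audit trail. The four-eyes check (a requester cannot approve their own bypass), the class names, and the sample values are assumptions for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEntry:
    at: datetime
    actor: str
    action: str
    comment: str


@dataclass
class RuleBypassRequest:
    rule_name: str
    dataset: str
    requested_by: str
    reason: str
    approved_by: Optional[str] = field(default=None, init=False)            # set only via approve()
    audit: list[AuditEntry] = field(default_factory=list, init=False)      # append-only trail

    def __post_init__(self) -> None:
        self._log(self.requested_by, "requested", self.reason)

    def _log(self, actor: str, action: str, comment: str) -> None:
        self.audit.append(AuditEntry(datetime.now(timezone.utc), actor, action, comment))

    def approve(self, approver: str, comment: str) -> None:
        # Assumed four-eyes policy: the requester cannot approve their own bypass.
        if approver == self.requested_by:
            raise PermissionError("a bypass cannot be approved by its requester")
        self.approved_by = approver
        self._log(approver, "approved", comment)

    @property
    def is_approved(self) -> bool:
        return self.approved_by is not None


request = RuleBypassRequest(
    rule_name="allowed_values",
    dataset="payer_837_claims",
    requested_by="analyst_a",
    reason="Payer sent a new gender code; mapping update pending",
)
print(request.is_approved)  # False: the rule is still enforced
request.approve("steward_b", "Approved for this load while the mapping is updated")
print(request.is_approved)                # True
print([e.action for e in request.audit])  # ['requested', 'approved']
```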

Alerts and Reports: Staying Ahead of Data Quality Issues

Data Pulse enables robust alerting and reporting, designed to keep users informed of any data quality or execution failures across the entire data pipeline. Whether it’s an ingestion issue, a DQ rule violation, or an anomaly detected at any stage, the platform triggers real-time email and Slack alerts to notify the relevant teams immediately.

Users can customize alert preferences to receive notifications tailored to their needs, minimizing alert fatigue. Alerts can be configured for specific stages, so actionable insights from the stage where an issue arises reach the appropriate destination.
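Stage-scoped preferences and alert-fatigue controls can be sketched as a router that matches each alert against subscriptions by stage and minimum severity, then suppresses repeats of the same alert within a time window. The severity levels, the 30-minute window, and the destination strings are hypothetical, and actual delivery to email or Slack is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

SEVERITY_RANK = {"info": 0, "warning": 1, "critical": 2}


@dataclass(frozen=True)
class Subscription:
    destination: str            # e.g. "slack:#ingestion-ops" or "email:dq-team@example.org"
    stages: frozenset[str]      # pipeline stages this subscriber wants alerts for
    min_severity: str = "warning"


@dataclass(frozen=True)
class Alert:
    stage: str
    severity: str
    key: str                    # identity used for de-duplication, e.g. "dataset:rule"
    message: str


class AlertRouter:
    def __init__(self, subscriptions: list[Subscription], dedup_window: timedelta = timedelta(minutes=30)) -> None:
        self.subscriptions = subscriptions
        self.dedup_window = dedup_window
        self._last_sent: dict[tuple[str, str], datetime] = {}

    def route(self, alert: Alert, now: datetime) -> list[str]:
        """Return the destinations that should receive this alert right now."""
        targets = []
        for sub in self.subscriptions:
            if alert.stage not in sub.stages:
                continue
            if SEVERITY_RANK[alert.severity] < SEVERITY_RANK[sub.min_severity]:
                continue
            last = self._last_sent.get((sub.destination, alert.key))
            if last is not None and now - last < self.dedup_window:
                continue  # same alert sent recently: suppress to limit alert fatigue
            self._last_sent[(sub.destination, alert.key)] = now
            targets.append(sub.destination)
        return targets


router = AlertRouter([
    Subscription("slack:#ingestion-ops", frozenset({"L0", "L1"})),
    Subscription("email:bi-team@example.org", frozenset({"L5"}), min_severity="critical"),
])
t0 = datetime(2024, 1, 15, 6, 0)
alert = Alert("L1", "warning", "payer_837_claims:dx_code_present", "12% of records missing dx_code")
print(router.route(alert, t0))                          # ['slack:#ingestion-ops']
print(router.route(alert, t0 + timedelta(minutes=10)))  # [] (duplicate suppressed)
print(router.route(alert, t0 + timedelta(minutes=45)))  # ['slack:#ingestion-ops']
```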

By keeping users informed with clear, actionable information, the Alerts & Reports feature of Data Pulse empowers teams to take control of data quality, reducing the risk of errors and ensuring data integrity throughout the healthcare platform.

In conclusion, data trust is not a one-off achievement. Once established, it must be maintained for every data refresh that flows through the system. Ensuring data quality and observability in healthcare ETL platforms is therefore not just a technical necessity but a strategic imperative. With Innovaccer's Data Pulse, robust data quality controls, real-time insights, and transparent data lineage build trust in the data behind dashboards, applications, and critical decisions. Users can count on reliable data to drive outcomes, meet compliance requirements, and deliver top-tier patient care.
