Data Fidelity and Latency: All Things Clinical

The Evolution of Healthcare Data

Data fidelity is the degree to which data faithfully captures and represents the characteristics of its source. With the emergence of AI and real-time patient monitoring systems, fidelity has become even more crucial, particularly for detecting threats through advanced security measures and AI-powered threat detection platforms.

For example, stock ticker data may differ in accuracy and refresh rate between a Bloomberg terminal and an online trading website. Signal transmission systems have been dealing with data fidelity challenges for a long time.

Understanding Key Concepts

Data Fidelity vs. Accuracy

Although data fidelity and accuracy are often used interchangeably in healthcare discussions, they are distinct and complementary aspects of data quality.

On one hand, data fidelity refers to how detailed, precise, and reliable the collected information is, to the extent that healthcare professionals can trust and use this data to diagnose patients, prescribe treatments, and create care plans.

For example, a high-fidelity ECG recording captures even the complex waveform patterns of each heartbeat, including any minor fluctuations that can be clinically crucial.

On the other hand, accuracy focuses on the correctness of the data points, ensuring that the measurements truly represent the actual physiological parameter being monitored.

In practical terms, a patient monitoring system might have high fidelity by capturing blood pressure readings every minute with detailed waveforms, but if the device is improperly calibrated, these readings may lack accuracy despite their high fidelity. This distinction is crucial for healthcare professionals as it helps in both monitoring and decision-making.
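To make the distinction concrete, here is a small Python sketch under simplifying assumptions: fidelity is approximated as the fraction of expected per-minute samples actually captured, and accuracy as the mean absolute error against a trusted reference. The readings, the +12 mmHg calibration offset, and the helper names are hypothetical.

```python
from statistics import mean


def capture_fidelity(readings: list[float], expected_samples: int) -> float:
    """Fraction of expected samples actually captured (a crude fidelity proxy)."""
    return len(readings) / expected_samples


def mean_abs_error(readings: list[float], reference: list[float]) -> float:
    """Mean absolute deviation from a trusted reference (an accuracy proxy)."""
    return mean(abs(r - ref) for r, ref in zip(readings, reference))


# Hypothetical "true" systolic pressure in mmHg, one value per minute for an hour.
true_bp = [120.0 + (minute % 5) for minute in range(60)]

# Device A: samples every minute (high fidelity) but reads 12 mmHg high (miscalibrated).
device_a = [bp + 12.0 for bp in true_bp]

# Device B: correctly calibrated but samples only every 15 minutes (low fidelity).
device_b = true_bp[::15]

print("A:", capture_fidelity(device_a, 60), mean_abs_error(device_a, true_bp))
print("B:", capture_fidelity(device_b, 60), mean_abs_error(device_b, true_bp[::15]))
```

Device A scores perfectly on this fidelity proxy yet is off by 12 mmHg on every reading, while device B is exact but misses most of the hour; high fidelity and high accuracy have to be achieved separately.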

Fidelity vs. Quality

In everyday usage, fidelity is the quality of faithfulness or loyalty; applied to data, it means faithfulness to the source.

Data quality refers to the degree to which data is reliable, and it therefore plays a vital role in maintaining data integrity. Data quality depends on several key characteristics. First, data must be complete, meaning it contains all, or at least a substantial portion, of the necessary information. Second, it must be unique, free of duplicate or unnecessary entries that could skew results or waste storage.

Data must also be consistent and valid, with information presented and formatted uniformly throughout the dataset. These elements work together to create a robust foundation for data-driven decision-making and analysis.
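The quality characteristics discussed above (completeness, uniqueness, consistency, and validity) translate naturally into automated checks. A minimal Python sketch, assuming a hypothetical vitals-feed schema with `patient_id`, `heart_rate`, and `recorded_at` fields; the 20 to 300 bpm plausibility range is an illustrative assumption, not a clinical standard.

```python
from collections import Counter

# Hypothetical schema for a vitals feed; real EMR schemas will differ.
REQUIRED_FIELDS = ("patient_id", "heart_rate", "recorded_at")


def completeness(records: list[dict]) -> float:
    """Share of records in which every required field is present and non-empty."""
    if not records:
        return 0.0
    complete = sum(
        all(r.get(field) not in (None, "") for field in REQUIRED_FIELDS) for r in records
    )
    return complete / len(records)


def duplicate_count(records: list[dict]) -> int:
    """Number of records repeating an earlier (patient_id, recorded_at) key."""
    keys = Counter((r.get("patient_id"), r.get("recorded_at")) for r in records)
    return sum(n - 1 for n in keys.values())


def invalid_heart_rates(records: list[dict], low: int = 20, high: int = 300) -> list[dict]:
    """Records whose heart rate is present but not an integer within [low, high]."""
    bad = []
    for r in records:
        hr = r.get("heart_rate")
        if hr is None:
            continue  # missing values are reported by completeness(), not here
        if not isinstance(hr, int) or not low <= hr <= high:
            bad.append(r)
    return bad


records = [
    {"patient_id": "P1", "heart_rate": 72, "recorded_at": "2024-01-01T08:00"},
    {"patient_id": "P1", "heart_rate": 72, "recorded_at": "2024-01-01T08:00"},  # duplicate
    {"patient_id": "P2", "heart_rate": None, "recorded_at": "2024-01-01T08:05"},  # incomplete
    {"patient_id": "P3", "heart_rate": "88", "recorded_at": "2024-01-01T08:10"},  # inconsistent type
    {"patient_id": "P4", "heart_rate": 450, "recorded_at": "2024-01-01T08:15"},  # out of range
]

print(completeness(records), duplicate_count(records), len(invalid_heart_rates(records)))
```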

The relationship between data fidelity and data quality is particularly significant because enterprise-level quality decisions rely heavily on accurate, reliable data. Together they form a foundation for robust quality management, with each reinforcing the other to ensure optimal business outcomes.

Organizations that recognize this connection are better positioned to make informed decisions and maintain high standards across their operations.

Modern Healthcare Data Architecture

High-Fidelity Data and Latency in Healthcare

High-fidelity data is data that is accurate and reliable, and it is perhaps the most helpful, informative, and sought-after data among healthcare professionals. Its depth and precision help identify patterns in patients' conditions, leading to improvements in predictive analytics and better future outcomes.

Data latency, on the other hand, is the delay between the time data is generated or sent and the time it is received or processed; in other words, the time it takes for a message to travel from one place to another.
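Measured per event, latency is simply the receive timestamp minus the generation timestamp. The Python sketch below assumes hypothetical `generated_at`/`received_at` fields in ISO 8601 form and synchronized clocks on both ends, and reports a nearest-rank 95th percentile alongside the median.

```python
import math
from datetime import datetime
from statistics import median


def latencies_seconds(events: list[dict]) -> list[float]:
    """Per-event latency in seconds: time received minus time generated."""
    return [
        (datetime.fromisoformat(e["received_at"])
         - datetime.fromisoformat(e["generated_at"])).total_seconds()
        for e in events
    ]


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical monitor events; assumes source and receiver clocks are synchronized.
events = [
    {"generated_at": "2024-01-01T08:00:00", "received_at": "2024-01-01T08:00:02"},
    {"generated_at": "2024-01-01T08:01:00", "received_at": "2024-01-01T08:01:01"},
    {"generated_at": "2024-01-01T08:02:00", "received_at": "2024-01-01T08:02:30"},
    {"generated_at": "2024-01-01T08:03:00", "received_at": "2024-01-01T08:03:03"},
]

lat = latencies_seconds(events)
print("median:", median(lat), "p95:", percentile(lat, 95))
```

Reporting a high percentile matters because the single 30-second straggler barely moves the median but dominates the p95, and for time-sensitive uses it is the straggler that hurts.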

Although data latency and fidelity often seem to correlate, they are independent factors when quantifying the complexity of deliverables for a particular healthcare vertical.

As we examine the fidelity and latency requirements of various data applications, it becomes important to assess the data models and the skills required to build these systems. Let's begin by illustrating the categories of healthcare verticals with respect to fidelity/latency and then take a deep dive into each.

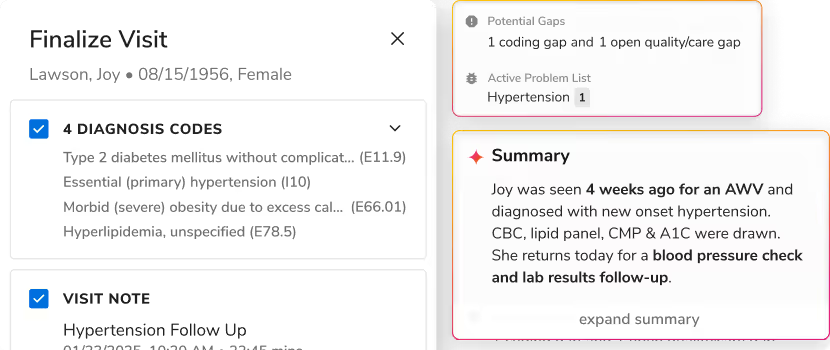

EMR/EHR

As providers interact with EMRs in ER and inpatient settings, EMR systems need to record data such as heart rate or blood pressure with very high fidelity, and that data must be high quality and time-sensitive. The refresh rates of such data also need to be very high, because any change may warrant a quick response.

Such systems are extremely complex to build and take time to mature in their capabilities. The resources or entities at the data model level need to capture high-fidelity data at a very fine granularity.

EMR/EHR attributes:

- Extremely High Fidelity

- Very low latency and time-sensitive

- Highest skill set requirements to build/maintain

- Finest granularity in the data model

- Slowly Changing Dimensions (SCD) Type 6
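The last item refers to the Slowly Changing Dimensions pattern commonly called Type 6, which Kimball describes as a Type 2 dimension (a new row per change, with validity dates) augmented with a Type 1 "current value" column that is overwritten on every version. The Python sketch below applies it to a hypothetical patient care-unit attribute; the class and field names are illustrative, not any EMR vendor's schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class PatientUnitRow:
    surrogate_key: int
    patient_id: str                # natural key
    historical_unit: str           # unit in effect during this row's validity window
    current_unit: str              # overwritten on every version (the Type 1 part)
    effective_from: date
    effective_to: Optional[date]   # None means the row is still open
    is_current: bool


def apply_scd6(rows: list[PatientUnitRow], patient_id: str, new_unit: str, change_date: date) -> None:
    """Record a care-unit change for one patient using SCD Type 6 semantics (in place)."""
    current = next((r for r in rows if r.patient_id == patient_id and r.is_current), None)
    if current is not None and current.historical_unit == new_unit:
        return  # nothing changed
    if current is not None:
        # Type 2 part: close the existing version instead of overwriting it.
        current.effective_to = change_date
        current.is_current = False
    # Type 1 part: every version of this patient now shows the latest unit.
    for r in rows:
        if r.patient_id == patient_id:
            r.current_unit = new_unit
    next_key = max((r.surrogate_key for r in rows), default=0) + 1
    rows.append(PatientUnitRow(next_key, patient_id, new_unit, new_unit, change_date, None, True))


dim: list[PatientUnitRow] = []
apply_scd6(dim, "P1", "ED", date(2024, 1, 1))
apply_scd6(dim, "P1", "ICU", date(2024, 1, 2))
for row in dim:
    print(row)
```

The Type 1 column lets a query report every historical row under the patient's present unit without a self-join, while the Type 2 rows preserve what was true at each point in time; maintaining both is part of why this level of granularity is demanding to build.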

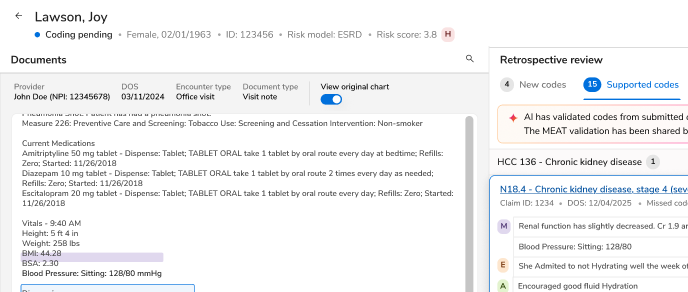

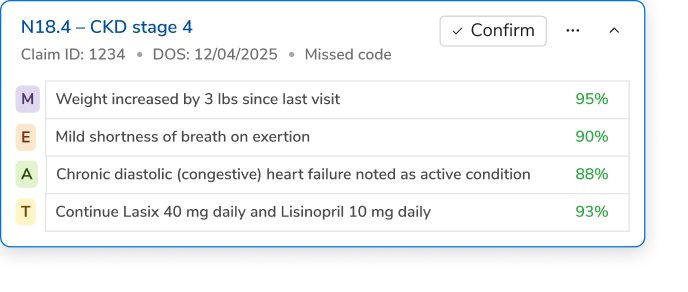

CDS (Clinical Decision Support Systems):

CDS (clinical decision support) systems come in a close second, with inpatient CDS slightly edging out ambulatory CDS. Both EMRs and CDSs are highly patient-specific. Although CDSs for acute and ambulatory care share the intent of improving overall patient care, acute CDS demands slightly higher fidelity and lower latency. For example, compare an anemia workup in an outpatient setting with a cardiovascular surgery procedure, where both check platelet counts. Expectations are high for the utility of CDSs in acute care because acute care in hospitals and emergency rooms is the most intensive and expensive part of the healthcare system on a per-patient basis.

Another example is the onset of sepsis. For every hour that passes before sepsis is diagnosed and treated, the risk of mortality increases by 7.6%. Real-time, contextual information is critical here, which imposes a low-latency requirement.

CDS attributes:

- High Fidelity (inpatient slightly higher)

- Low Latency

- Very high skill set requirements to build/maintain; real-time and contextual

- Finer granularity in the data model

- Slowly Changing Dimensions (SCD) Type 3/6
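For comparison with Type 6, SCD Type 3 keeps a single row per entity and preserves only limited history in an added "prior value" column. A minimal Python sketch, assuming a hypothetical per-patient risk-tier attribute in a CDS dimension:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class PatientRiskRow:
    patient_id: str
    current_tier: str
    prior_tier: Optional[str] = None   # the one previous value Type 3 remembers
    changed_on: Optional[date] = None


def apply_scd3(dim: dict[str, PatientRiskRow], patient_id: str, new_tier: str, change_date: date) -> None:
    """Type 3: overwrite in place, shifting the old value into the 'prior' column."""
    row = dim.get(patient_id)
    if row is None:
        dim[patient_id] = PatientRiskRow(patient_id, new_tier)
    elif row.current_tier != new_tier:
        row.prior_tier = row.current_tier
        row.current_tier = new_tier
        row.changed_on = change_date


risk: dict[str, PatientRiskRow] = {}
apply_scd3(risk, "P1", "low", date(2024, 1, 1))
apply_scd3(risk, "P1", "high", date(2024, 1, 2))
apply_scd3(risk, "P1", "critical", date(2024, 1, 3))
print(risk["P1"])  # prior_tier is "high"; the original "low" value is gone
```

Type 3 is cheap to store and query, but it only answers "what was the previous value?", which is why Type 6 is listed alongside it when fuller history is needed.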

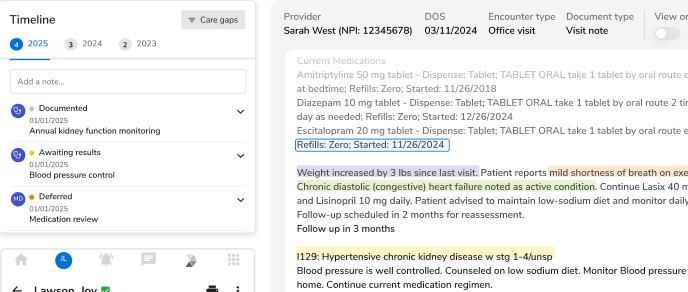

Clinical Operations/Analytics:

Clinical operations in a hospital aim to foster an environment that is patient-friendly and operationally consistent. Clinical operations software deals with data capture and data reconciliation, often works from some form of replicated dataset, and operates at lower fidelity and higher latency than CDSs and EMRs. This reduction is permissible because the software's purpose is to reduce clinical variability (uniform care across patients) and enable a productive care environment. Clinical analytics helps organizations get ahead of demand while maintaining or improving patient outcomes and satisfaction. It helps manage variation in patient demand to enable better planning and resource usage.

Clinical operations and analytics data enable success in the following areas:

- Appropriate, timely care

- Collaborative, goal-oriented approach to patient-centered care with a direct impact on a health system’s bottom line

- Analysis of improvement opportunities and identification of operational problems

- Design and implementation of interventions, with measurement of results

- Continuous process improvement

Clinical Operations/Analytics attributes:

- High Fidelity but lower than EMR/CDS

- More relaxed latency requirements than EMR/CDS

- High Skill set required to replicate and version the data

- Medium granularity required in the data model

- Slowly Changing Dimensions (SCD) Type 3 will do
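Clinical operations work from replicated data that has to be reconciled against its source. One common approach, sketched here in Python under the assumption that both sides can be snapshotted as `{record_key: row}` dictionaries of JSON-serializable values, is to compare per-row content hashes; the encounter keys and fields are hypothetical.

```python
import hashlib
import json


def row_fingerprint(row: dict) -> str:
    """Stable SHA-256 of a row's contents; sort_keys makes field order irrelevant."""
    return hashlib.sha256(json.dumps(row, sort_keys=True).encode("utf-8")).hexdigest()


def reconcile(source: dict[str, dict], replica: dict[str, dict]) -> dict[str, list[str]]:
    """Report keys missing from the replica, extra in it, or present with different content."""
    shared = source.keys() & replica.keys()
    return {
        "missing_in_replica": sorted(source.keys() - replica.keys()),
        "extra_in_replica": sorted(replica.keys() - source.keys()),
        "mismatched": sorted(
            k for k in shared if row_fingerprint(source[k]) != row_fingerprint(replica[k])
        ),
    }


source = {
    "E1": {"unit": "ICU", "status": "admitted"},
    "E2": {"unit": "Ward", "status": "admitted"},
    "E3": {"unit": "ED", "status": "discharged"},
}
replica = {
    "E1": {"status": "admitted", "unit": "ICU"},   # same content, different field order
    "E2": {"unit": "ICU", "status": "admitted"},   # stale unit
    "E4": {"unit": "Ward", "status": "admitted"},  # not in source
}
print(reconcile(source, replica))
```

Hashing lets large snapshots be compared without shipping full rows between systems; in practice each side would typically compute its own fingerprints and exchange only those.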

Clinical analytics in hospital settings falls somewhere between RCM Ops (discussed next) and Clinical Ops. For example, clinical analytics may analyze admission-rate patterns for certain diagnoses, using real-time medical data to generate insights or support decisions. In terms of fidelity, it is closer to EMR data than its RCM cousin.

RCM Operations/Analytics:

RCM (Revenue Cycle Management) Operations emphasizes claims, collections, and contract management, with a laser focus on the dollar amount of net collections and on driving accounts receivable (A/R) days toward zero. Fidelity around clinical data is greatly reduced; latency, however, does not increase as much, because the data still needs to be recent for financial accounting. RCM Analytics can afford much lower fidelity, as its intent is coding productivity and billing operations that reduce average A/R days rather than clinical activities. Its data still needs to be highly relevant, though not as time-sensitive. The data must nonetheless be of the utmost quality, as it drives all contract, collection, and claim management, which is essential for overall process streamlining and revenue realization. RCM also locates lost revenue opportunities, where a provider administers a service but does not capture it or does not get paid for it.

The fidelity/latency gap between RCM Operations and RCM Analytics merits describing each separately.

RCM Operations

- Streaming data to clearinghouses and payers and carrying out the contract, claims, and collections workflows must be accurate to the dollar and highly time-bound, which makes this work high fidelity and low latency.

- Staying in sync with payer rosters and clearinghouse metadata keeps these same workflows moving, which again points to low-latency requirements.

Patient payments have become 35 percent of most physician revenues, and mainstream adoption of alternative payment models has made coding and reimbursement much more complex than the classical fee-for-service revenue cycle. Hence the added emphasis on operations.

RCM Analytics

- Analyzing coding/collections productivity or A/R aging reports does not need very high fidelity, and somewhat higher latency is acceptable.

- Likewise, determining days to bill, or producing a transaction detail report by financial class or transaction type, can work with higher-latency data.
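The A/R aging report and the days-in-A/R metric mentioned above are simple aggregations, which is part of why they tolerate higher latency. A minimal Python sketch, assuming claims age from the date of service (some organizations age from the billing date instead), conventional 30-day buckets, and the common formula days in A/R = total A/R divided by average daily charges; the claim data is made up.

```python
from datetime import date
from decimal import Decimal

# Conventional 30-day aging buckets: (min age, max age or None for open-ended, label).
BUCKETS = ((0, 30, "0-30"), (31, 60, "31-60"), (61, 90, "61-90"), (91, None, "91+"))


def aging_report(open_claims: list[dict], as_of: date) -> dict[str, Decimal]:
    """Sum outstanding balances by days elapsed since the date of service."""
    report = {label: Decimal("0") for _, _, label in BUCKETS}
    for claim in open_claims:
        age = (as_of - claim["service_date"]).days
        # Claims dated after as_of (negative age) match no bucket and are skipped.
        for low, high, label in BUCKETS:
            if age >= low and (high is None or age <= high):
                report[label] += claim["balance"]
                break
    return report


def days_in_ar(total_ar: Decimal, charges_in_period: Decimal, days_in_period: int) -> Decimal:
    """Days in A/R = total A/R / average daily gross charges over the period."""
    return total_ar / (charges_in_period / days_in_period)


claims = [
    {"claim_id": "C1", "service_date": date(2024, 3, 20), "balance": Decimal("500.00")},
    {"claim_id": "C2", "service_date": date(2024, 2, 15), "balance": Decimal("1200.00")},
    {"claim_id": "C3", "service_date": date(2024, 1, 10), "balance": Decimal("800.00")},
    {"claim_id": "C4", "service_date": date(2023, 11, 1), "balance": Decimal("300.00")},
]
print(aging_report(claims, as_of=date(2024, 3, 31)))
print(days_in_ar(Decimal("90000"), Decimal("270000"), 90))
```

`Decimal` is used instead of floats so that balances stay exact to the cent, in keeping with the dollar-level accuracy RCM expects.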

Summarizing RCM Operations/Analytics attributes:

- Medium Fidelity with Ops ahead of analytics

- Latency higher than previous categories, with Ops << Analytics

- Skill set required to replicate and author the data for proper corralling of workflows

- Medium granularity is required in the data model; denormalized tables are ok.

- Slowly Changing Dimensions (SCD) Type 2 will do
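SCD Type 2, mentioned in the last item, preserves full history by closing the current row and inserting a new one on every change. That suits RCM: a claim should be billed to the payer in effect on the date of service, even if the patient's coverage has changed since. A minimal Python sketch with a hypothetical coverage dimension and half-open validity windows:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class CoverageVersion:
    patient_id: str
    payer: str
    valid_from: date
    valid_to: Optional[date] = None  # None marks the current, open-ended version


def apply_scd2(history: list[CoverageVersion], patient_id: str, payer: str, change_date: date) -> None:
    """Type 2: close the current version and append a new one; never overwrite history."""
    current = next(
        (v for v in history if v.patient_id == patient_id and v.valid_to is None), None
    )
    if current is not None:
        if current.payer == payer:
            return  # no change to record
        current.valid_to = change_date
    history.append(CoverageVersion(patient_id, payer, change_date))


def payer_as_of(history: list[CoverageVersion], patient_id: str, on: date) -> Optional[str]:
    """Payer in effect on a given date, using half-open [valid_from, valid_to) windows."""
    for v in history:
        if v.patient_id == patient_id and v.valid_from <= on and (v.valid_to is None or on < v.valid_to):
            return v.payer
    return None


coverage: list[CoverageVersion] = []
apply_scd2(coverage, "P1", "Payer A", date(2023, 1, 1))
apply_scd2(coverage, "P1", "Payer B", date(2024, 1, 1))
print(payer_as_of(coverage, "P1", date(2023, 6, 1)))  # -> Payer A
print(payer_as_of(coverage, "P1", date(2024, 2, 1)))  # -> Payer B
```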

Clinical Trials/Research:

Next comes clinical trials/research, where data is collected over a period of time as part of the experiments that research requires. This data needs to be relatively clean, with fidelity maintained throughout the prescribed time period. Clinical trial data from drug discovery trials is furthest from the EMR in terms of latency; its fidelity, however, is much closer, as the data still needs to be clinically relevant and statistically significant to establish the efficacy of the drug.

Although latency may be much higher while drug efficacy is being established, fidelity loss must be minimal to establish the veracity of the data and the validity of the trial.

Population Health Management/Value-based Care (VBC):

Last but not least is value-based care. Here, slicing and dicing the data is of paramount importance for healthcare providers to deliver optimal care to patients. It also provides the right payer-level views for insurance carriers, who may participate in different care protocols to ensure a better quality of life for the patient while putting their own funds to better use. A whole range of reporting and information mechanisms is possible here for patients, healthcare providers, and payers, helping each in their respective domains. Population health and its analytics may be the lowest in fidelity and the highest in latency. This is tolerable because it does not affect patient-centric care protocols or the analytics: the data is still sufficient to demonstrate the value propositions of a value-based care approach. It also means that data granularity is the coarsest here of all the preceding verticals.
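The coarse granularity described above typically comes from rolling patient-level records up to cohort-level measures before they reach reporting. A minimal Python sketch, assuming hypothetical fields (`age`, `condition`, and a boolean `goal_met` flag for whatever quality measure a program tracks) and 10-year age bands:

```python
from collections import defaultdict


def age_band(age: int, width: int = 10) -> str:
    """Bucket an exact age into a coarse band such as '60-69'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def cohort_summary(patients: list[dict]) -> dict[tuple[str, str], dict]:
    """Roll patient-level rows up to (age band, condition) cohorts."""
    totals = defaultdict(lambda: {"patients": 0, "goal_met": 0})
    for p in patients:
        key = (age_band(p["age"]), p["condition"])
        totals[key]["patients"] += 1
        totals[key]["goal_met"] += int(p["goal_met"])
    return {
        key: {**t, "goal_met_rate": t["goal_met"] / t["patients"]}
        for key, t in totals.items()
    }


patients = [
    {"patient_id": "P1", "age": 67, "condition": "diabetes", "goal_met": True},
    {"patient_id": "P2", "age": 64, "condition": "diabetes", "goal_met": False},
    {"patient_id": "P3", "age": 45, "condition": "hypertension", "goal_met": True},
    {"patient_id": "P4", "age": 61, "condition": "diabetes", "goal_met": True},
]
for cohort, stats in cohort_summary(patients).items():
    print(cohort, stats)
```

Once aggregated, individual readings, exact ages, and patient identifiers are gone, which is exactly the fidelity trade-off this vertical can afford.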

CONCLUSION

The skill sets needed to build, manage, and maintain data architectures grow more complex as the need for data fidelity increases. Data velocity, volume, and variety have their own effects, but those are managed through technology drivers such as asynchronous processing and improved I/O throughput. A key point is that the skill and engineering effort required ramp up considerably as we move toward higher-fidelity, lower-latency verticals. Verticals like inpatient EMR/CDS warrant higher data fidelity and much finer granularity, with SCD (Slowly Changing Dimensions) Type 6. As we move toward lower-fidelity zones such as revenue cycle and clinical analytics, data entities may be much coarser and possibly more denormalized. The data model complexity of these verticals may grow exponentially as one heads toward the origin in Figure (A), which in turn demands a much higher level of expertise to corral these models. Finally, the models need to encompass the business processes and workflows that run the given customer's business and its related activities.
