From Pilots to Practice: Why Public Health Needs a Governance-first Approach to AI

Public health agencies across the country are increasingly relying on AI models to analyze data from clinics, pharmacies, and community programs to highlight areas with rising prescription gaps or delayed follow-ups. While these insights can help prioritize outreach and improve coordination with providers, many teams struggle with limited oversight of how these models are developed, validated, and monitored.

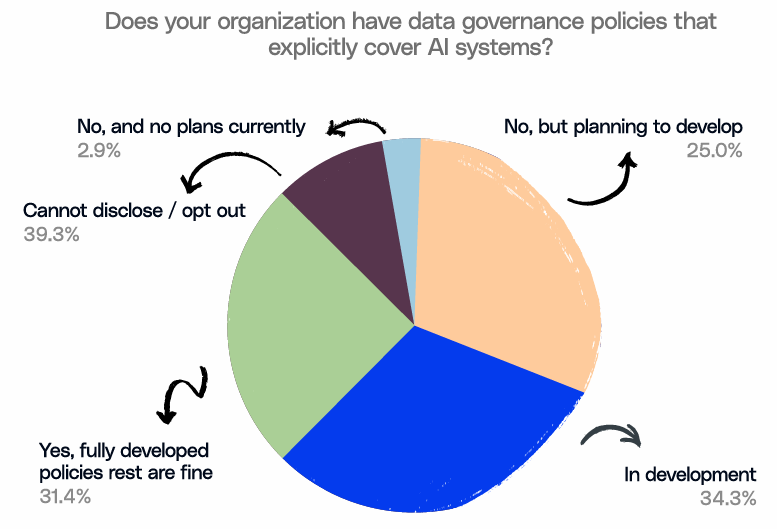

This raises critical questions about transparency and highlights the need for strong data governance frameworks to ensure AI systems are responsibly managed. However, while the adoption of AI accelerates, governance has not kept pace.

This gap was also highlighted in Innovaccer’s recent survey-based report, "How State and Local Public Health Leaders Can Promote AI and Data Governance." The report found that AI is advancing more quickly than governance within US public health agencies, which could undermine trust, equity, and long-term sustainability unless governance frameworks and workforce training are strengthened.

Let’s take a closer look at what this imbalance means for public health and why a governance-first approach is key to moving from pilots to real-world practice.

The Adoption-Governance Paradox

Enthusiasm for AI among local health agencies across the country has gained traction, but the survey reveals a stark gap between ambition and readiness. Nearly 85% of departments reported using some form of AI, and more than half launched pilots in the past year alone. This clearly signals momentum and modernization.

This imbalance, rapid experimentation without consistent oversight, creates uneven standards and potential risks. As the report notes, enthusiasm for innovation must be matched with structured accountability if counties want to translate pilots into sustainable, safe practice.

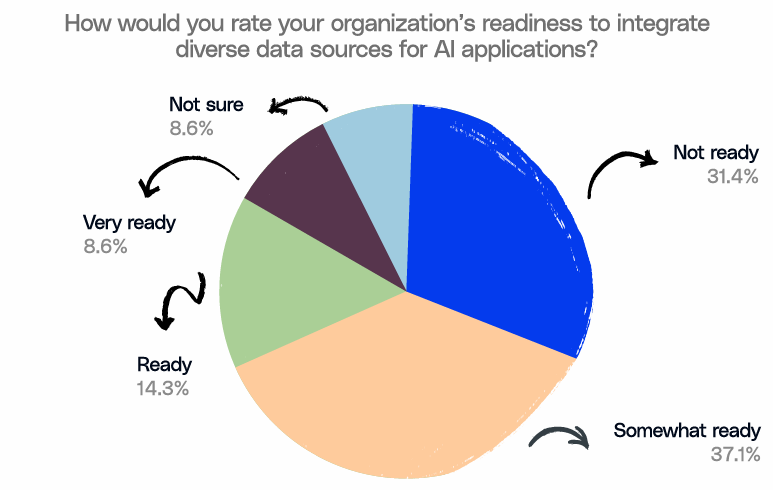

Readiness Exists, But Gaps Remain

As mentioned earlier, public health agencies are eager to adopt AI, but enthusiasm alone doesn’t guarantee readiness. The survey found that over 75% of leaders rated their organizations’ AI literacy as low to moderate, and 65% said they were not fully prepared to integrate diverse data sources, a critical foundation for effective AI use.

Still, there’s room for optimism. About two-thirds (65%) of departments already have staff or consultants dedicated to data architecture and modernization. The capability exists. It just needs alignment between training, governance, and investment to turn readiness into real progress.

What’s Driving AI Adoption at the Local Level

The growing interest in AI across local health departments isn’t surprising. Many teams are trying to modernize outdated systems and manage workforce challenges.

In that spirit, agencies are already putting AI to work in meaningful ways. They are:

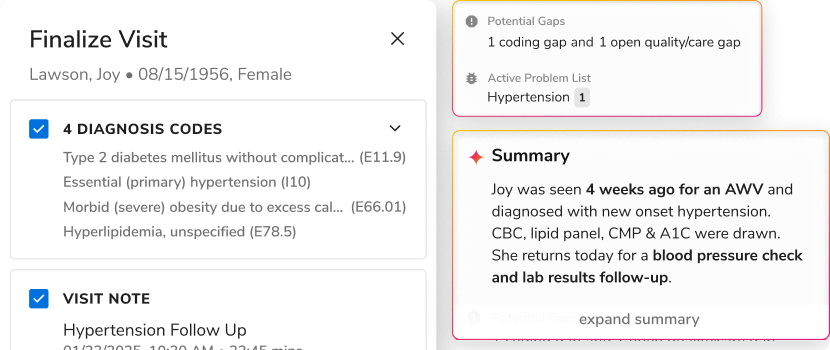

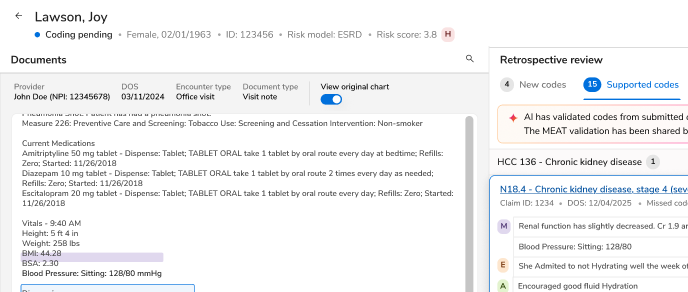

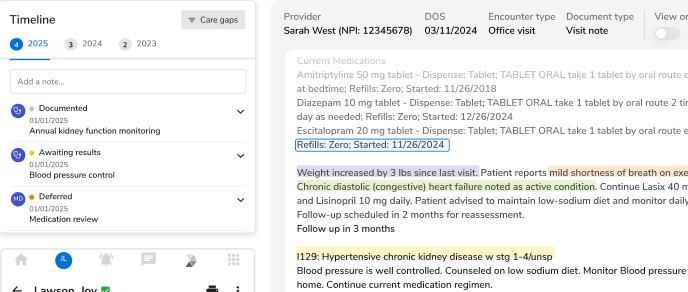

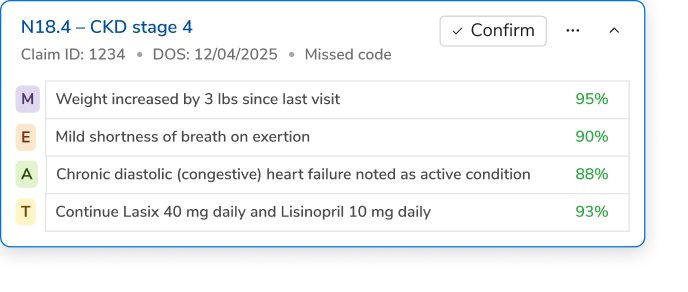

- Connecting fragmented data from clinics, pharmacies, and community programs to get a clearer picture of population health.

- Using chatbots and digital assistants to answer public queries faster and free up staff time.

- Turning to predictive analytics to identify high-risk populations early and plan interventions more effectively.

A Roadmap Forward

Before scaling pilots, agencies should ensure their foundations are strong. Dr. Anil Jain, Chief Innovation Officer at Innovaccer, recommends a five-step AI Readiness Checklist to guide public health departments from experimentation to accountable adoption:

- Define the right use cases: Identify specific public health goals where AI can drive measurable impact, such as predicting chronic-disease risk or improving outreach efficiency.

- Evaluate ethical, legal, and social implications: Review bias and privacy concerns early to safeguard equity and community trust.

- Assess data and infrastructure readiness: Ensure interoperable, high-quality, and securely governed data before deploying any AI tool.

- Vet vendors and technologies: Choose partners whose models are validated, explainable, and aligned with public health values.

- Plan for sustainable implementation: Build governance boards, train staff, and set processes for monitoring, retraining, and accountability over time.

Turning Readiness into Resilience

Developing resilience in public health AI begins with shifting the discussion from what the technology can do to how it is used responsibly. Governance provides that compass by ensuring innovation is aligned with ethics and public accountability. When health departments view governance as an enabler instead of an afterthought, pilot projects can become long-term programs that families and communities trust. Ultimately, responsible AI is not about having cooler technology, but about building stronger systems that advance equity and earn trust through every decision.

Learn how your agency can build a robust governance foundation. Read the report now.
