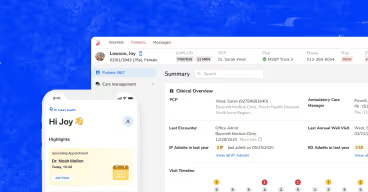

Innovaccer AI: Boosting Healthcare Transcription with Cutting-Edge OCR Optimization

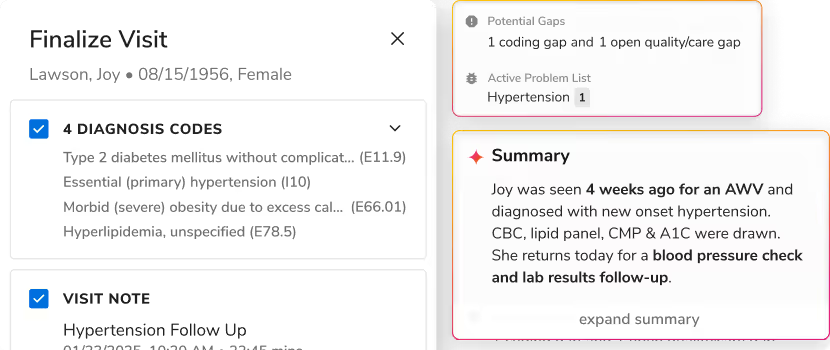

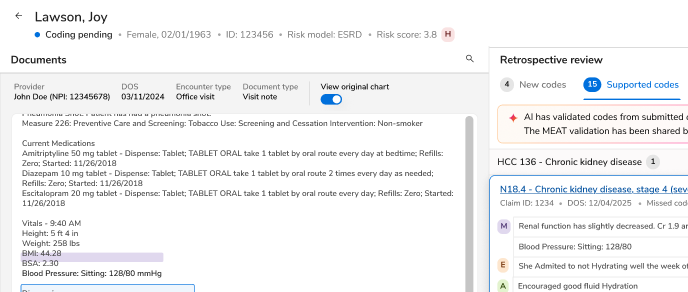

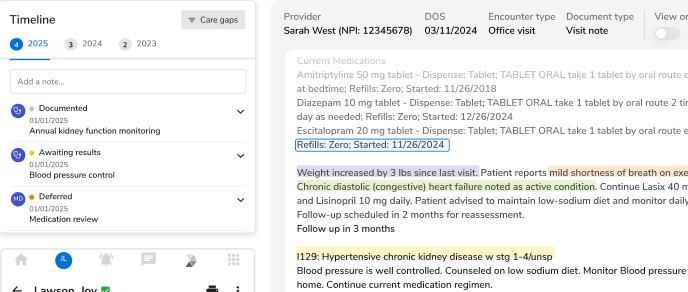

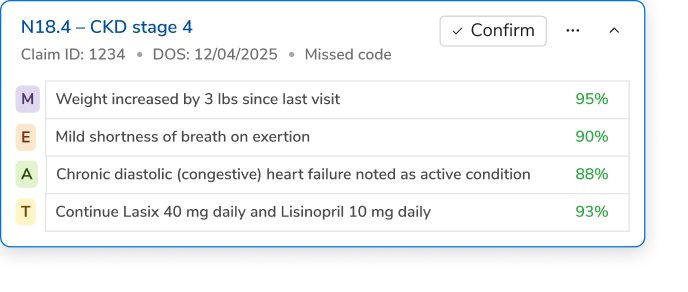

Accurate and efficient transcription of healthcare notes is foundational for quality patient care, regulatory compliance, and streamlined workflows. However, Optical Character Recognition (OCR) technology faces significant challenges in healthcare documentation—complex layouts, diverse fonts, handwriting variations, and the urgent need for real-time processing.

At Innovaccer, we have embraced PARSEQ, a cutting-edge OCR architecture that delivers superior recognition accuracy compared to leading models like CRNN, ABINet, TRBA, and ViTSTR-S. PARSEQ’s advanced encoder-decoder design enables exceptional text recognition—crucial for accurately capturing complex clinical notes, prescriptions, and diagnostic reports.

But high accuracy often comes at the cost of increased computational complexity and latency, limiting real-time deployment in busy healthcare settings. To overcome this, our team embarked on a rigorous optimization journey leveraging NVIDIA’s TensorRT and TRITON Inference Server, aiming to achieve real-time OCR processing without sacrificing accuracy.

Understanding the Challenge: Balancing Accuracy and Latency

The initial deployment of PARSEQ on TRITON delivered limited throughput (around 6–8 requests per second) and response times of 5 to 7 seconds per inference, far from the sub-second speeds required in clinical environments. GPU utilization was near 94%, which showed how heavily the unoptimized model loaded the hardware and how little headroom remained.

A significant obstacle emerged during TensorRT conversion: a compatibility issue with the model’s Squeeze operation. Squeeze removes size-1 dimensions from a tensor, and in this model it was applied dynamically, without a fixed axis parameter, so the dimensions to remove were only determined at runtime. TensorRT needs to resolve such operations statically when it builds an engine, and this mismatch caused the conversion to fail.

Innovative Solutions for TensorRT Optimization

To tackle this, Innovaccer’s engineering team explored three main strategies:

1. Removing the Squeeze Operation: Replacing it with a Reshape operation. This introduced tensor dimension mismatches downstream and disrupted model integrity.

2. Creating a Custom TensorRT Plugin: Designed to mimic the dynamic Squeeze behavior. Although the plugin compiled successfully, TensorRT gave precedence to its native implementation, so the custom plugin was bypassed and the same errors recurred.

3. Making Squeeze Static: Explicitly defining the axis parameter for the Squeeze operation to enforce static behavior. This required modifying the ONNX model and replacing attribute-based axis specification with a constant tensor input, aligning with TensorRT’s expectations.

The third approach ultimately succeeded, enabling full TensorRT engine generation with dynamic batch size support—crucial for balancing throughput and latency in clinical workflows.

Real-World Performance Gains

Post-optimization benchmarks showed a marked improvement. The TensorRT-accelerated PARSEQ model achieved over 20 requests per second with inference times consistently below one second, up from 6–8 requests per second and 5–7 second responses in the initial deployment.

Further enhancements came from switching from HTTP to gRPC for model serving, leveraging efficient binary protocols and persistent connections. This boosted throughput to around 23 requests per second with 40 concurrent users, solidifying PARSEQ’s readiness for high-demand healthcare environments.
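Figures such as "requests per second at 40 concurrent users" are usually obtained from a closed-loop load test, in which each simulated user sends its next request as soon as the previous one returns. The sketch below shows one way such a measurement could be taken using only the Python standard library. It is not the harness used for the numbers above; `measure_throughput` and `send_request` are hypothetical names, and `send_request` stands in for whatever call actually reaches the model server.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def measure_throughput(send_request, concurrent_users=40, requests_per_user=5):
    """Run a closed-loop load test.

    Each simulated user calls send_request() requests_per_user times in a row.
    Returns (requests_per_second, mean_latency_seconds).
    """

    def user_session(_user_id):
        latencies = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            send_request()
            latencies.append(time.perf_counter() - start)
        return latencies

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        per_user = list(pool.map(user_session, range(concurrent_users)))
    elapsed = time.perf_counter() - wall_start

    all_latencies = [lat for session in per_user for lat in session]
    return len(all_latencies) / elapsed, sum(all_latencies) / len(all_latencies)
```

In practice `send_request` might wrap an inference call made through the `tritonclient.grpc` or `tritonclient.http` client, which would allow the two protocols to be compared under the same load.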

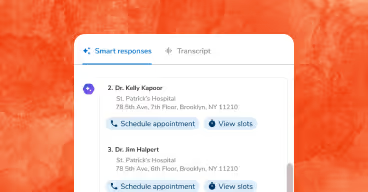

What This Means for Healthcare

- Real-time Transcription: Clinicians and care teams can rely on near-instantaneous, accurate digital transcription of handwritten and printed notes, improving documentation speed.

- High Accuracy with Low Latency: The optimized PARSEQ model balances precision and performance, essential for complex healthcare documents with varied layouts and fonts.

- Scalable Deployment: Integration with NVIDIA’s TRITON and TensorRT platforms ensures flexible, scalable deployment across diverse healthcare IT infrastructures.

- Foundation for Ambient AI: Fast, reliable OCR transcription feeds downstream AI workflows—like clinical coding, decision support, and patient engagement tools—accelerating innovation in healthcare delivery.

Innovaccer’s work with PARSEQ and TensorRT exemplifies how thoughtful engineering can break traditional trade-offs between accuracy and speed, unlocking new possibilities for digital healthcare transformation.
