Your Million-Dollar AI Failed Because You Built It Backwards


In December 2000, the Daily Mail ran a news piece titled "Internet may be just a passing fad as millions give up on it." The article argued that online shopping had a limited future and that email was causing information overload.


Today, that skepticism seems quaint.

Companies that dismissed the internet as an unjustifiable infrastructure investment spent the next decade playing catch-up.

Yet here we are in 2024, making the exact same mistake with healthcare AI.

We're treating transformative infrastructure like decorative add-ons, implementing tools in isolation, and wondering why our clinical teams are more burned out than ever.

Consider a typical workday for a busy care manager.

Maya, a care manager at a 200-bed hospital, uses three AI tools daily. One generates risk scores. Another manages scheduling. A third handles care notes. Three "revolutionary" AI tools that can't access each other's data.

When the risk tool flags a stable patient as high-risk for the third consecutive day, Maya knows the patient is fine. The AI doesn't. It can't see the care plan updates sitting in a different system.

This is what $2.3 million in AI investment looks like when you build backwards.

The Expensive Delusion About AI in Healthcare

Healthcare leaders face a stark choice: master AI as infrastructure, or drown in a sea of disconnected tools amid mounting regulatory and policy changes.

The Congressional Budget Office projects that 7.8 million patients will lose Medicaid coverage by 2034. A 2024 report from Google Cloud and The Harris Poll found that clinicians spend 28 hours per week on paperwork, with 82% reporting burnout.

The stakes extend beyond operational efficiency. CMS quality measures increasingly tie reimbursement to care coordination metrics.

Healthcare systems need AI that relieves these interconnected pressures rather than creating new ones.

A 2024 JMIR review of 38 hospital systems confirms what Maya experiences daily: AI implementations often create more manual work and alert fatigue rather than relief. Yet hospitals keep buying more disconnected tools, hoping the next one will finally deliver the promised transformation.

It won't. Not because the technology fails, but because the sequence is backwards.

Care delivery follows a logical progression. A patient schedules an appointment. Checks in. Sees a provider. Receives care. Gets preventive outreach.

Your AI strategy must follow this same progression, or it creates chaos instead of clarity.

Most organizations do the opposite. They start with predictive analytics before fixing documentation. They buy population health dashboards while nurses still transcribe notes manually.

The Three-Wave Sequence That Works

After working in US healthcare tech for a decade, we've learned that most AI failures stem from two core problems: unstructured data and lack of interoperable systems. Without clean data, true interoperability is fantasy. Without interoperability, AI is just expensive noise.

So where do you begin? Not where vendors tell you. Not where your board wants you to.

It's where every patient journey starts: your front office. From there, you advance to the care team, then scale to population health.

Get the sequence right, and you achieve what disconnected tools never could: lasting relief from administrative burden, true interoperability, and performance gains.

What follows isn't just another framework. After watching enough multimillion-dollar failures, we know it's the only sequence that works.

Wave 1: Free the Front Line

Your receptionist drowns in phone calls. Your nurse documents the same information three times in three different systems. Your physician types notes during dinner.

These aren't efficiency problems. They're data problems. And until you solve them, every AI tool you buy is just an expensive add-on.

Start with ambient scribes that capture intake conversations and clinical visits, automated systems that schedule appointments and send personalized reminders, and EMR writebacks that eliminate duplicate entries.

These aren't the AI capabilities that make headlines, and your board won't celebrate them. But they create what everything else depends on: clean, structured, real-time data.

Wave 2: Empower the Care Team

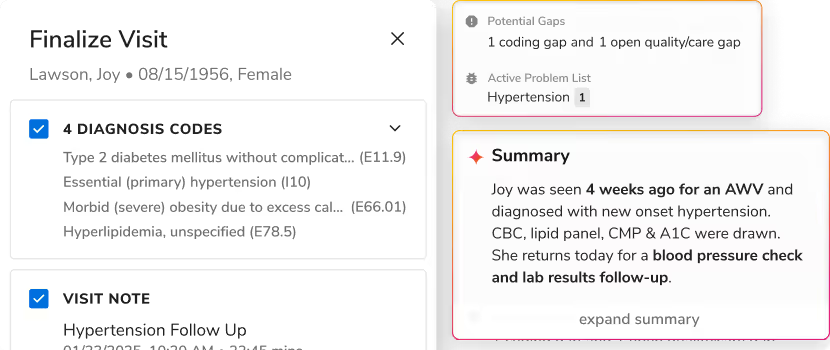

Only after your foundation systems create clean data can AI actually coordinate care.

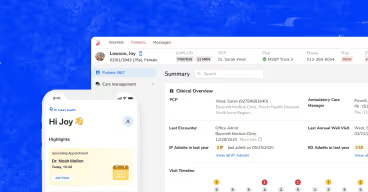

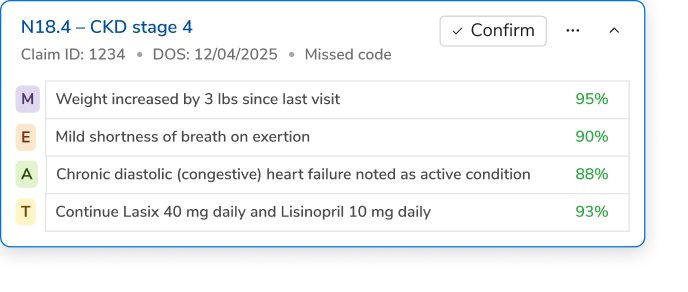

Picture Maya's Tuesday after Wave 1 implementation. A diabetes patient checks in for routine follow-up. As Dr. Chen enters the exam room, her tablet displays real-time insights: the patient's home glucose readings from last week, medication adherence data from the pharmacy, and a care gap alert for overdue eye screening.

Dr. Chen addresses the screening during the visit instead of discovering the gap three months later in a quarterly report. The patient schedules the appointment before leaving. No follow-up calls. No care coordination meetings. No care gaps falling through the cracks.

This is what Wave 2 looks like: AI that works within existing workflows rather than creating new ones. Risk scores that update with every new data point instead of in an overnight batch. Care teams that see the same information at the same time, not pieced together from fragmented reports.

Wave 3: Activate Predictive Intelligence

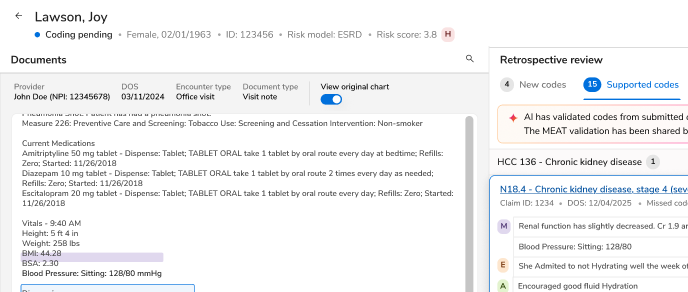

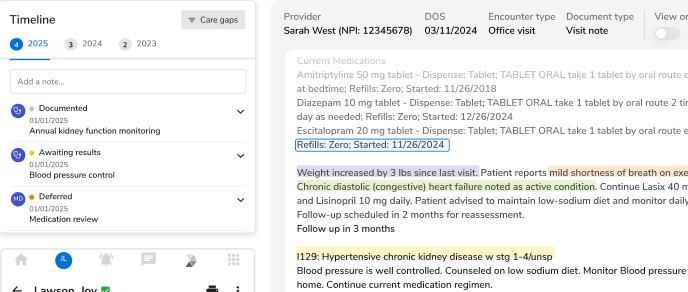

Six months later, Maya's Thursday looks entirely different. Her dashboard shows 847 patients under her care, but AI identifies the 23 who need immediate attention. A 65-year-old diabetic hasn't filled his insulin prescription in five days. A heart failure patient's home monitoring shows subtle weight increases that predict readmission risk within 72 hours.

Maya reaches both patients before symptoms worsen. The diabetic patient had insurance changes he didn't understand. The heart failure patient needed medication adjustment, not emergency care. Two potential readmissions prevented.

This is Wave 3: population intelligence that works because Waves 1 and 2 created the foundation. Maya's AI doesn't just predict problems. It prevents them.

But if your blood glucose readings are three weeks old, if medication data sits trapped in pharmacy silos, if social determinants remain undocumented, then your sophisticated population health management tool predicts nothing useful. It's a Ferrari engine in a broken car.

Organizations aiming to reduce preventable readmissions shouldn't start with prediction algorithms. They should start with documentation, progress to coordination, and only then layer on population intelligence, which is when it becomes powerful.

The Platform Question Every Vendor Avoids

Maya's three tools represent healthcare's most expensive lie: that you can achieve integration through accumulation. Each new point solution promises to solve one problem brilliantly. Each requires separate training, distinct workflows, and manual bridges. The burden compounds.

The uncomfortable truth: three integrated capabilities beat ten isolated ones. Every time.

This realization shaped how we built Gravity by Innovaccer, with healthcare interoperability designed in from the ground up. Instead of building another point solution, we developed infrastructure where documentation feeds coordination, coordination feeds population health, and insights flow without friction.

But the platform question isn't about us or any single vendor. It's about rejecting the accumulation trap entirely.

Your Choice Is Binary

If your front line is drowning, your AI strategy is fiction.

That's the choice every healthcare leader faces now: build AI as infrastructure from the ground up, or keep adding expensive band-aids to broken workflows.

The question isn't whether your competitors will make the right choice. It's whether you'll make it first.
