Breaking the Documentation Barrier: What QA Leaders Are Saying About the Future of AI-Powered Grooming

Introduction

Software development teams are at a turning point: two trends, AI and collaborative tooling, are converging to reshape how requirements are captured, documented, and translated into test coverage.

Engineering leaders acknowledge real progress in agile practices and backlog refinement, yet reliably documenting everything that comes up in fast-paced grooming sessions remains a persistent challenge. Misunderstandings, undocumented changes, and gaps in test coverage still slip through the cracks.

The Current Landscape: Progress, Yet Familiar Risks

Lokesh Agrawal, a QA Director at Innovaccer, has spent over a decade in the industry and watched agile adoption transform the way teams work. But he still sees familiar pain points when it comes to grooming sessions.

“We’re far better at backlog refinement than we were ten years ago. But honestly, those important clarifications that come up live in meetings? They still get lost,” Lokesh explains.

His teams struggle with scattered notes and inconsistent documentation processes. Despite sophisticated tools like Jira and Confluence, there's a gap between the conversation and the artifacts the team actually uses.

“You can have the best user story template in the world, but if nobody remembers to update it with what was discussed in the meeting, it's worthless.”

Instead of expecting people to be perfect note-takers, QA leaders are exploring a new mindset: using AI-powered tools to automatically close that documentation gap.

The AI Documentation Paradox: Promise vs. Reality

Mohammad Azam, Engineering Manager at Innovaccer, describes the enthusiasm—and frustration—around AI tooling for agile teams:

“There are AI transcription tools everywhere. But just dumping a transcript isn’t the answer. It’s not actionable.”

Azam points out that successful meeting transcription is only the first step. The real goal is turning those raw words into structured, test-ready insights.

“It’s like having a voice recorder for requirements. Helpful? Sure. But you still have to do the work of figuring out what's important, what's noise, and how it affects your test cases.”

This highlights a critical gap in many "meeting note" solutions: they capture words, but rarely help teams understand those words or integrate them into the QA process.

Beyond Basic Notes: The Contextual Challenge

Transcribing meetings is easy; making sense of them in context is hard.

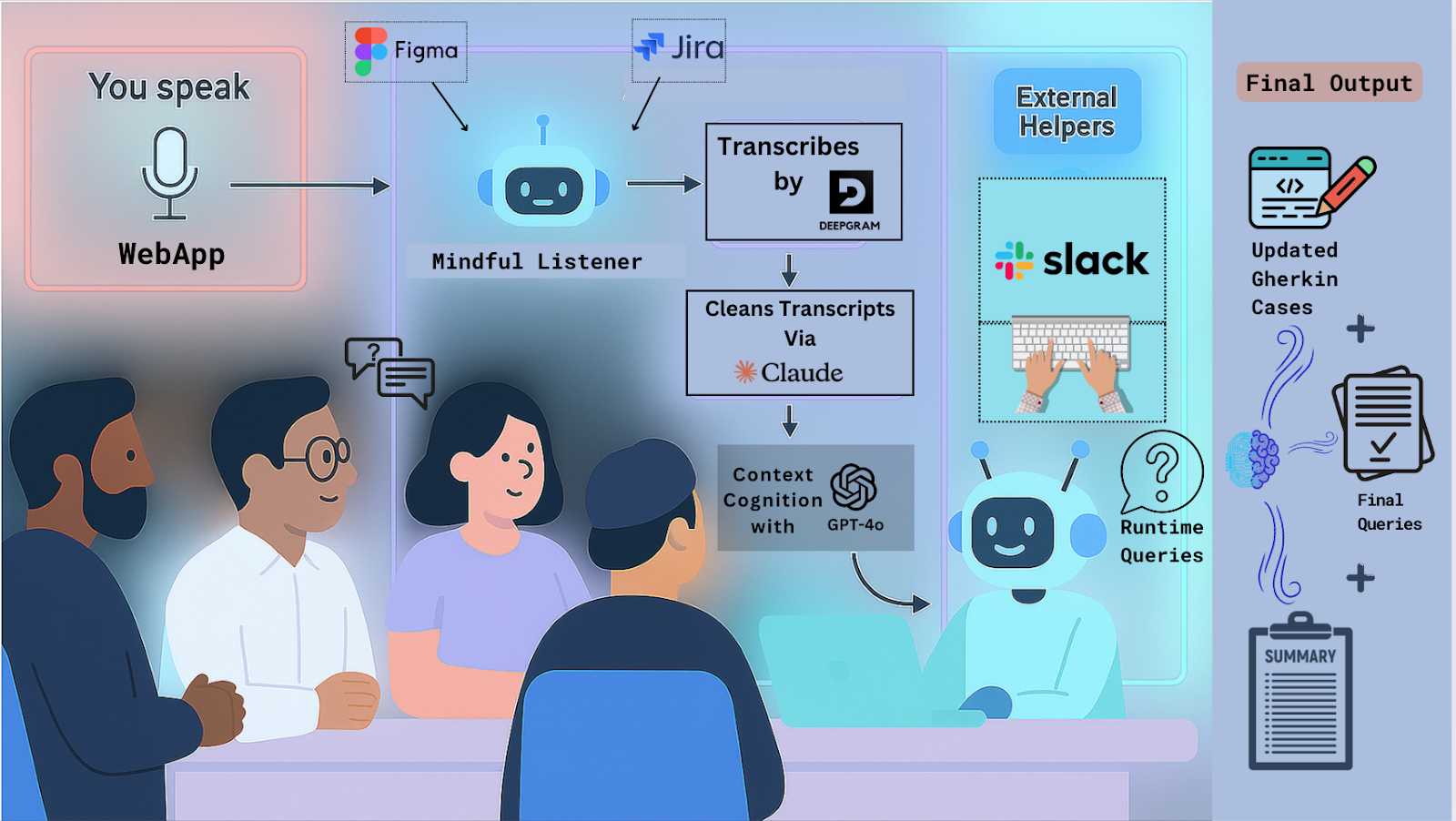

That’s why teams are turning to multi-source AI integrations that combine:

- Live meeting transcription using Deepgram, which provides high-accuracy, real-time speech-to-text conversion.

- User stories and acceptance criteria from backlog management tools like Jira, ensuring direct linkage to development plans.

- UI/UX designs and Figma prototypes for design context.

- Existing test cases or automation suites, so that coverage stays up to date.

By aggregating these sources, the AI can interpret a discussion against the story, the designs, and the existing tests, and identify its impact across requirements, design, and testing.
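To make the aggregation step concrete, here is a minimal Python sketch of how the four sources might be bundled into one context object before it is handed to a model. The `GroomingContext` class, its fields, and the `to_prompt` helper are hypothetical illustrations; they do not correspond to any Deepgram, Jira, or Figma API, and the inputs are assumed to have already been fetched from those tools.

```python
from dataclasses import dataclass, field


@dataclass
class GroomingContext:
    """Hypothetical bundle of everything the model sees for one user story."""
    story_key: str                      # e.g. a Jira issue key (format assumed)
    transcript: str                     # speech-to-text output for the session
    acceptance_criteria: list[str] = field(default_factory=list)
    design_notes: list[str] = field(default_factory=list)    # e.g. summarized Figma frames
    existing_tests: list[str] = field(default_factory=list)  # titles of current test cases

    def to_prompt(self) -> str:
        """Render each source as a labeled section so the model can cross-reference them."""
        def section(title: str, items: list[str]) -> str:
            # An empty source is shown explicitly rather than silently omitted.
            body = "\n".join(f"- {item}" for item in items) or "- (none)"
            return f"## {title}\n{body}"

        return "\n\n".join([
            f"# Story {self.story_key}",
            section("Acceptance criteria", self.acceptance_criteria),
            section("Design notes", self.design_notes),
            section("Existing test cases", self.existing_tests),
            f"## Meeting transcript\n{self.transcript}",
        ])


if __name__ == "__main__":
    ctx = GroomingContext(
        story_key="PROJ-123",  # hypothetical key
        transcript="PM: Let's also allow login with SSO.",
        acceptance_criteria=["User can log in with email and password"],
        existing_tests=["Login succeeds with valid credentials"],
    )
    print(ctx.to_prompt())
```

Keeping each source in its own labeled section is one simple way to let a model notice, for example, that a transcript mentions SSO while no acceptance criterion or test case does.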

“We needed something that didn’t just spit out text,” Lokesh explains. “It had to know how the conversation changed the story or introduced new edge cases.”

Smarter Analysis: From Transcripts to Test Cases

This is where advanced natural language processing (NLP) and generative AI models come in.

A typical setup splits the work between two models:

- Claude (for summarization and signal extraction) to filter meeting noise and focus on key points.

- GPT-4o (for reasoning and test artifact generation) to interpret context, suggest queries, and propose updated test cases.

QA teams are experimenting with solutions that:

- Filter noise: Distinguish between side talk and meaningful requirement changes.

- Spot ambiguities: Flag unclear points for clarification.

- Suggest queries: Automatically generate questions to ensure edge cases are addressed during the meeting itself.

- Update test cases: Propose changes or additions in real time.

“It’s not just capturing. It’s coaching,” Azam notes. “It asks the questions a good tester would ask.”

For example, these tools can generate clarifying questions at regular intervals during grooming, helping teams resolve unclear requirements before they turn into defects.
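The idea of generating questions at regular intervals can be sketched as a scheduler that batches transcript segments and checks them every few minutes. This is a minimal Python sketch under explicit assumptions: the `Segment` and `QueryScheduler` names, the 120-second default interval, and the regular-expression list of vague phrases are all hypothetical. The regex check is a deliberately simple stand-in for the model call (Claude or GPT-4o in the setup described above); it shows where ambiguity detection plugs in, not how a production system detects ambiguity.

```python
import re
from dataclasses import dataclass, field

# Heuristic stand-in for the LLM step (hypothetical): phrases that often
# signal an unresolved requirement, each mapped to a clarifying question.
VAGUE_PATTERNS = {
    r"\b(?:maybe|probably|might)\b": "Is this in scope for the story, or still undecided?",
    r"\b(?:fast|quickly|soon)\b": "What is the measurable target (e.g. a response time)?",
    r"\b(?:etc|and so on)\b": "Which cases does 'etc.' cover? Can we list them?",
    r"\bsome (?:users|cases)\b": "Which users or cases exactly?",
}


@dataclass
class Segment:
    start_s: float  # seconds from the start of the session
    speaker: str
    text: str


@dataclass
class QueryScheduler:
    """Batches segments and checks them once `interval_s` seconds of session time have passed."""
    interval_s: float = 120.0
    _pending: list[Segment] = field(default_factory=list, init=False)
    _last_run_s: float = field(default=0.0, init=False)

    def add(self, seg: Segment) -> list[str]:
        self._pending.append(seg)
        if seg.start_s - self._last_run_s < self.interval_s:
            return []  # not time for a check yet; keep buffering
        self._last_run_s = seg.start_s
        return self.flush()

    def flush(self) -> list[str]:
        """Check everything buffered so far (also call this at the end of the session)."""
        batch, self._pending = self._pending, []
        questions = []
        for seg in batch:
            for pattern, question in VAGUE_PATTERNS.items():
                if re.search(pattern, seg.text, flags=re.IGNORECASE):
                    questions.append(f'{seg.speaker} said "{seg.text}": {question}')
        return questions
```

Batching by interval, rather than checking every sentence, mirrors the "regular intervals" behavior described above and keeps the number of (in practice, paid) model calls bounded per session.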

Real-Time Impact: From Conversations to QA Artifacts

When this workflow works well, the transformation is profound.

Instead of relying on memory or fragmented notes, the system automatically:

- Transcribes meetings using Deepgram.

- Filters and summarizes with Claude.

- Generates and updates test cases using GPT-4o.

- Identifies requirement changes for user stories in Jira.

- Creates new test cases where gaps exist.

- Produces meeting summaries with flagged questions and next steps.

- Pushes artifacts directly into backlog and test management tools.

This turns informal, unstructured discussions into structured, actionable assets for the entire team.
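The automated flow can be pictured as a chain of stages. The following is a minimal Python sketch, not the implementation behind the tools described here: every stage is passed in as a plain function, so the `transcribe`, `summarize`, `generate`, and `push` callables stand in for Deepgram, Claude, GPT-4o, and Jira clients respectively. Those signatures, along with `Artifact` and `run_grooming_pipeline`, are hypothetical, and no vendor SDK is called.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Artifact:
    kind: str       # e.g. "summary", "question", "test_case", "story_change"
    story_key: str
    body: str


# Hypothetical stage signatures; real vendor clients would be wrapped to fit them.
Transcribe = Callable[[bytes], str]                               # audio -> transcript
Summarize = Callable[[str], str]                                  # transcript -> key points
Generate = Callable[[str, str, list[str]], list[Artifact]]        # (story, summary, tests) -> artifacts
Push = Callable[[list[Artifact]], None]                           # write back to backlog/test tools


def run_grooming_pipeline(audio: bytes, story_key: str, existing_tests: list[str],
                          transcribe: Transcribe, summarize: Summarize,
                          generate: Generate, push: Push) -> list[Artifact]:
    transcript = transcribe(audio)                  # speech-to-text
    summary = summarize(transcript)                 # filter noise, keep requirement signals
    artifacts = [Artifact("summary", story_key, summary)]
    artifacts += generate(story_key, summary, existing_tests)  # questions, test cases, story changes
    push(artifacts)                                 # sync to Jira / test management
    return artifacts


if __name__ == "__main__":
    pushed: list[Artifact] = []
    result = run_grooming_pipeline(
        audio=b"<audio bytes>",
        story_key="PROJ-123",  # hypothetical key
        existing_tests=["Login succeeds with valid credentials"],
        # Stubs standing in for the real services:
        transcribe=lambda audio: "PM: we also need SSO login. Dev: ok.",
        summarize=lambda text: "Scope change: add SSO login.",
        generate=lambda key, summary, tests: [
            Artifact("test_case", key, "Login succeeds via SSO provider")
        ],
        push=pushed.extend,
    )
    for a in result:
        print(a.kind, a.story_key, a.body, sep=" | ")
```

Injecting the stages keeps the orchestration testable with stubs and lets a team swap one model or vendor without touching the rest of the flow.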

Policy and Process: The Integration Imperative

While the technology is critical, QA leaders stress that the organizational process must evolve too.

Lokesh emphasizes:

“You can have the best AI in the world, but if your team doesn’t integrate it into the workflow—if people don’t review the generated artifacts or answer the flagged questions—it fails.”

Successful rollouts involve:

- Clear ownership of AI-generated test case reviews.

- Defined governance for approving updates.

- Seamless integration with Jira, Confluence, and test management systems to avoid duplication or manual syncing.
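One way to encode clear ownership and an approval step is a review gate that AI-generated changes must pass before anything is synced. The sketch below assumes a single named owner per change and a simple proposed/approved/rejected lifecycle; `ProposedChange`, `Status`, and `ready_to_sync` are hypothetical names, and real test management tools will have their own workflow states.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class ProposedChange:
    story_key: str
    body: str
    owner: str                          # person accountable for reviewing this change
    status: Status = Status.PROPOSED
    reviewed_by: Optional[str] = None

    def review(self, reviewer: str, approve: bool) -> None:
        # Clear ownership: only the assigned owner may approve or reject.
        if reviewer != self.owner:
            raise PermissionError(f"{reviewer} is not the assigned reviewer for {self.story_key}")
        # Defined governance: a decision is recorded once and not silently overwritten.
        if self.status is not Status.PROPOSED:
            raise ValueError("change has already been reviewed")
        self.status = Status.APPROVED if approve else Status.REJECTED
        self.reviewed_by = reviewer


def ready_to_sync(changes: list[ProposedChange]) -> list[ProposedChange]:
    """Only approved changes are written back to the backlog or test management tool."""
    return [c for c in changes if c.status is Status.APPROVED]
```

Filtering at sync time means an unreviewed AI suggestion never reaches Jira or the test suite, which is the failure mode the quote above warns about.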

Implications for QA Leadership

These QA and engineering leaders highlight essential lessons for adopting AI-powered grooming solutions:

- Context matters more than capture: Raw transcripts alone are insufficient. Value comes from integrating and interpreting multiple sources.

- AI requires governance: Tools must be embedded in a broader process of review and accountability.

- Continuous, real-time analysis: Automated query generation surfaces ambiguities during the session, not after.

- Integrated workflows: Direct linkage with existing backlog and test management tools maximizes impact.

- Improved test coverage = reduced risk: Better documentation leads to fewer bugs and surprises downstream.

The insights shared by Lokesh, Azam, and others underscore a key reality: The future of QA depends not just on better automation, but on using AI to ensure requirements are fully understood, properly documented, and rigorously tested—from the very first conversation.

Organizations that master this are better positioned to deliver software that meets user needs, with fewer costly errors and delays.
